Welcome to the ProLUG Security Engineering Course Book.

This Book

This book contains all materials pertaining to the course, including links to external resources. It has been put together with care by a number of ProLUG group members, referencing original instructional materials produced by Scott Champine (Het Tanis).

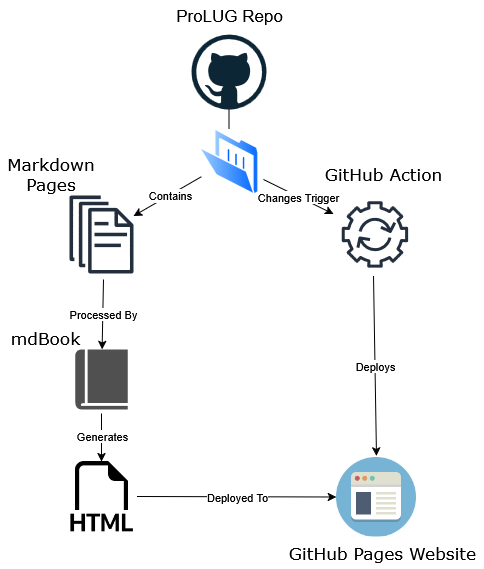

The content is version controlled with Git and stored here: https://github.com/ProfessionalLinuxUsersGroup/psc/

Furthermore, the book has been built with mdbook for ease of navigation. Be sure to try the search functionality.

Course Description

This course addresses how to secure Linux in a corporate environment, with a focus on adhering to regulations, best practices, and industry standards. It introduces the concept of controls, their implementation, and how they fit into an overall security posture. The learner will practice securely building, deploying, integrating, and monitoring Linux systems. Standard security documentation and reporting are practiced throughout to better prepare the learner for the industry.

Prerequisite(s) and/or Corequisite(s):

Prerequisites: None

Credit hours: N/A

Contact hours: 100 (40 Theory Hours, 60 Lab Hours)

Course Summary

Major Instructional Areas

- Build Standards and Compliance

- Securing the Network Connection

- User Access and System Integration

- Bastion Hosts and Air-Gaps

- Updating Systems and Patch Cycles

- Monitoring and Parsing Logs

- Monitoring and Alerting

- Configuration Drift and Remediation

- Certificate and Key Madness

Course Objectives

- Build and configure a Linux system to adhere to compliance frameworks

- Integrate Linux into a network in a secure fashion

- Integrate Linux with Enterprise Identity and Access Management (IAM) frameworks

- Implement user ingress controls to a system/network with bastion frameworks

- Update Linux to resolve security vulnerabilities and report out to security teams

- Design logging workflows that move event logs off of systems for real-time monitoring

- Monitor and alert on events in Linux

- Manage system configuration drift and its remediation

Written Discussions

Written discussions are assigned as 'Discussion Posts' within each unit. Discussions generally take place within the Discord server under #prolug-projects, and each unit links to its specific discussion posts there.

Completing the Course

To complete this course, students must participate in group discussions and complete the provided labs. Additionally, students must propose and complete a final project involving skills learned in the course.

Recommended Tools, Resources, and Frameworks

- Killercoda: https://killercoda.com/

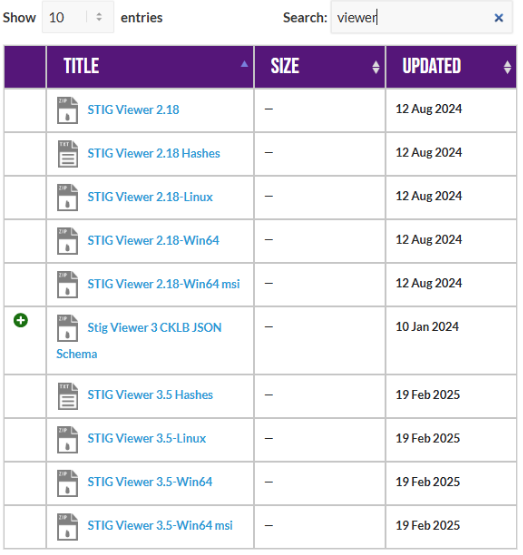

- STIG Resources: https://public.cyber.mil/stigs/srg-stig-tools/

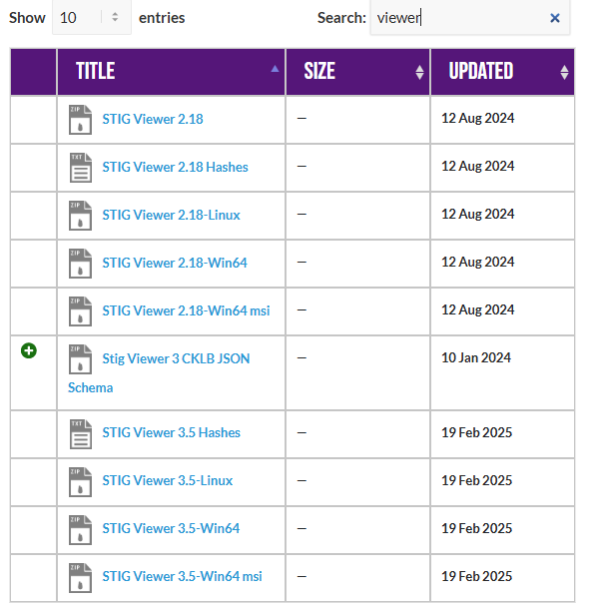

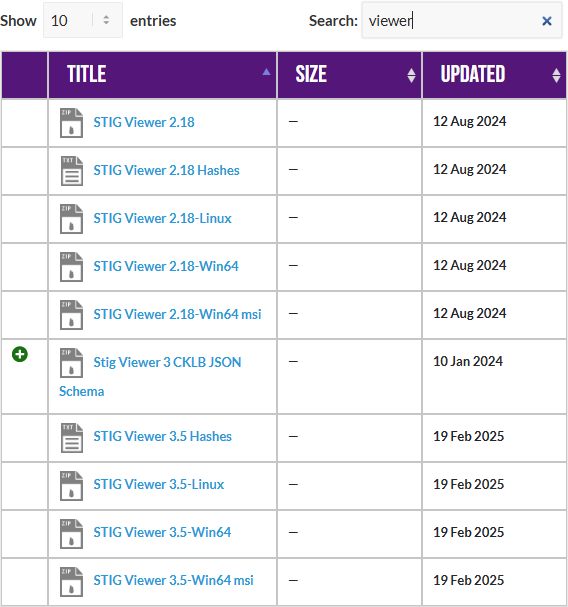

- Recommended (but not required) STIG Viewer: v2.18

- NIST: https://www.nist.gov/

- Open Worldwide Application Security Project Top 10: https://owasp.org/www-project-top-ten/

- CIS Controls and Benchmarks: https://www.cisecurity.org/cis-benchmarks

Required Resources

Option #1 (Killercoda Machine)

Cloud Lab server running Ubuntu on Killercoda.

Minimal resources are sufficient for our tasks:

- 1 CPU

- 2 GB RAM

- 30 GB Hard Drive

- Network Interface (IP already set up)

Option #2 (Home Lab)

Local VM server running RHEL, Fedora, or Rocky

Minimal resources:

- 1 CPU

- 2 GB RAM

- Network Interface (Bridged)

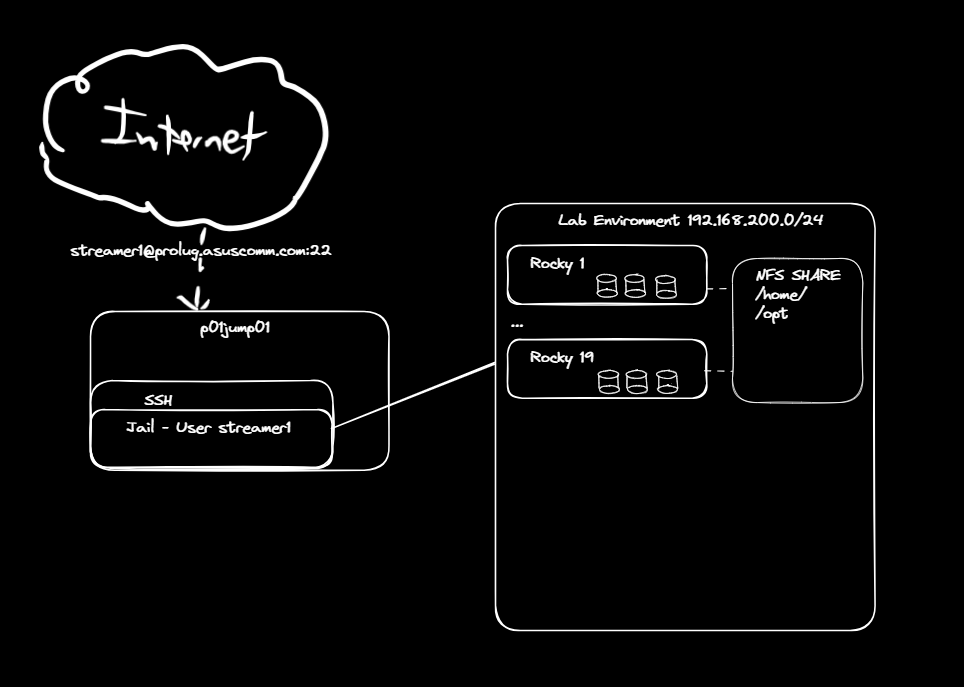

Option #3 (ProLUG Remote Lab)

ProLUG Lab access to Rocky 9.4+ instance.

Minimal resources are sufficient for our tasks:

- 1 CPU

- 4 GB RAM

- Network Interface (IP already set up)

Course Plan

Instructional Methods

This course is designed to promote learner-centered activities and support the development of Linux security skills. The course utilizes individual and group learning activities, performance-driven assignments, problem-based cases, projects, and discussions. These methods focus on building engaging learning experiences conducive to development of critical knowledge and skills that can be effectively applied in professional contexts.

Class Size

This class will effectively engage 40-60 learners.

Class Schedule

https://discord.com/events/611027490848374811/1353330418669326407

Class meets in weekend (brown bag) sessions, once per week for 10 weeks, for a total of 10 sessions.

| Session | Topic |
|---|---|
| 1 | Unit 1 - Build Standards and Compliance |
| 2 | Unit 2 - Securing the Network Connection |
| 3 | Unit 3 - User Access and System Integration |
| 4 | Unit 4 - Bastion Hosts and Air-Gaps |
| 5 | Unit 5 - Updating Systems and Patch Cycles |
| 6 | Unit 6 - Monitoring and Parsing Logs |
| 7 | Unit 7 - Monitoring and Alerting |
| 8 | Unit 8 - Configuration Drift and Remediation |
| 9 | Unit 9 - Certificate and Key Madness |
| 10 | Unit 10 - Recap and Final Project |

Suggested Learning Approach

In this course, you will be studying individually and within a group of your peers, primarily in a lab environment. As you work on the course deliverables, you are encouraged to share ideas with your peers and instructor, work collaboratively on projects and team assignments, raise questions, and provide constructive feedback.

Students wishing to complete the Security Engineering course are expected to devise and complete a capstone project, to be turned in at the end of the course.

The instructions, expectations, and deliverables for the project are listed on this page.

Instructions

- We have picked up a new client. They are requesting we help them adhere to the HIPAA compliance standard. Review an explanation of the standard here: https://www.hhs.gov/hipaa/for-professionals/security/laws-regulations/index.html

    - If you are in the EU and want to substitute GDPR, you may do so: https://gdpr.eu/what-is-gdpr/

- Build the documentation for HIPAA compliance.

    - How are we implementing risk analysis and management?
    - What are our safeguards?
        - Administrative
        - Physical
        - Technical
    - How do we form Business Associate Agreements?
    - What are our documentation practices?
        - Policies
        - Procedures
        - Update and review cadence

- Prepare to present (https://www.overleaf.com/ is a great alternative to PowerPoint).

    - Set up a 15-20 slide deck on what you did:
        - Project purpose
        - Diagram
        - Build process
        - What did you learn?
        - How are you going to apply this?

- Do any of you want to present?

    - Let Scott know (@het_tanis) and we’ll get you a slot in the last few weeks.

Deliverables

- A 15-20 slide presentation of the above material that you would present to a group (presenting to us is voluntary, but definitely possible).

    - This can be done with Microsoft PowerPoint, LibreOffice Impress, or overleaf.com.

Each course run through the Professional Linux Users Group (ProLUG) allows you to earn a certification upon completion.

Certificates are awarded to those who complete the course within the timeframe that it is being run through the ProLUG Discord.

- To see when courses are running, join the ProLUG Discord server and check the Events section.

If you aim to earn the certification for completing this course, you must follow the guidelines set forth in this document.

There are four main components to earning the certification.

Worksheet Completion

Each unit has a corresponding worksheet.

On this worksheet are discussion questions, terms/definitions, optional "digging deeper" sections, and reflection questions.

These worksheets must be filled out and kept until the end of the course.

Upon reaching the end, they are to be submitted to the instructor (Scott Champine).

Worksheet Submission Format

The format in which you submit these worksheets is up to you.

Some students prefer to keep them in a GitHub repository, others prefer to just keep them as files on their machines and submit via email.

Discussion Questions

Each unit's worksheet contains multiple discussion questions.

Each discussion question has its own thread in the ProLUG Discord server, in the #course-discussion-posts forum channel.

To qualify for certification, you must:

- Post your answer to each discussion question in the correct thread.

- Respond to another student's answer in the same thread.

The goal of this is not to create busywork, but to spark discussions and see things from other points of view.

Lab Completion

Each unit has a lab that is to be completed.

The labs, like the worksheets, should also be completed and saved until the end of the course.

These labs should be submitted to the instructor along with the worksheets in the same format of your choice.

Final Project

Each ProLUG course has students complete a capstone project.

This is a requirement for earning a ProLUG course certification.

The project must meet the standards set forth in the Final Project Outline (or otherwise be approved by the instructor, Scott Champine).

In the Beginning

Founded approximately 15 years ago, the Professional Linux Users Group (ProLUG) began as a vision of Scott Champine, known in the community as Het Tanis. He identified the need for an informal yet structured space where Linux professionals could share knowledge, collaborate, and grow together. What started as local in-person meetups quickly gained traction, thanks to the increasing demand for open-source collaboration and the widespread adoption of Linux in both enterprises and personal projects.

Why ProLUG Started

ProLUG was born out of the recognition that Linux professionals often face challenges that are best solved through peer collaboration and hands-on experience. The community’s founding principles were rooted in creating an environment where newcomers could learn from experienced professionals, and seasoned users could gain exposure to advanced topics and emerging technologies. Its core mission was simple yet impactful: to provide continuous growth opportunities in Linux system administration, automation, and cloud technologies.

Some of the key motivations behind ProLUG's formation include:

- Peer Support: Helping members solve technical challenges through discussion and advice from experts.

- Knowledge Sharing: Encouraging open sharing of tips, tricks, configurations, and scripts related to Linux and open-source tools.

- Hands-on Learning: Providing access to practical labs, exercises, and real-world scenarios for hands-on training.

- Community Mentorship: Offering a space for members to mentor and be mentored by others in different stages of their careers.

- Certification Prep: Assisting members in preparing for recognized industry certifications.

The Expansion into an Online Community

While initially focused on local in-person meetings, ProLUG embraced online platforms to extend its reach globally. The switch to a virtual model enabled:

- Global Networking: Professionals and enthusiasts from around the world could now connect, learn, and collaborate without geographical limitations.

- 24/7 Discussion: Via platforms like Discord, members could share insights, discuss Linux problems, and exchange ideas anytime, anywhere.

- Greater Diversity: The online expansion diversified the member base, incorporating individuals from various industries and technical backgrounds, creating a rich environment for problem-solving.

Interactive Labs and Training Programs

One of ProLUG’s most successful expansions has been its focus on interactive, hands-on labs. To bridge the gap between theory and practice, Het Tanis launched a series of labs on platforms like Killercoda, covering a variety of topics including:

- Linux Essentials and System Administration

- Ansible Automation

- Kubernetes and Container Orchestration

- Security and Network Hardening

With over 50 interactive labs available and more being continuously developed, members benefit from practical scenarios that simulate real-world challenges. The labs cater to beginners, intermediates, and experts, ensuring everyone has something to gain.

Certification and Career Development

In 2024, ProLUG launched its first structured certification course: Enterprise Linux Administration. This program was designed to provide a comprehensive curriculum covering topics such as:

- Advanced Linux system configuration

- Enterprise networking and services

- Security management

- Scripting and automation

The first cohort of graduates successfully completed the program in January 2025, marking a major milestone in ProLUG’s commitment to professional development. Many graduates have reported success stories, such as landing new jobs, securing promotions, or gaining confidence in their Linux expertise.

What is a User Group?

A user group is a community of individuals who come together to share common interests, typically in a specific area of technology, such as Linux. These groups can be local or online and serve as platforms for:

- Collaboration: Members work together to troubleshoot, build projects, and share experiences.

- Networking: Opportunities to connect with professionals, mentors, and employers within the field.

- Learning: Workshops, presentations, and discussions that cover new and emerging technologies.

- Career Growth: Access to resources, training programs, and job opportunities.

ProLUG is a prime example of how a user group can grow beyond its initial purpose, evolving into a vibrant global community with practical learning opportunities and real-world outcomes.

Success Stories

Being part of ProLUG has proven highly beneficial for many members, with success stories ranging from career advancements to personal growth:

- Job Opportunities: Members have found jobs in system administration, DevOps, and cloud engineering roles through networking within ProLUG.

- Certifications: Many members have successfully obtained Linux-related certifications, including RHCSA, RHCE, and LFCS, using ProLUG’s resources and mentorship programs.

- Skill Development: Through interactive labs and group discussions, members have honed skills in automation (Ansible), scripting (Bash, Python), containerization (Docker, Kubernetes), and more.

- Mentorship Relationships: Senior professionals have mentored newcomers, creating a cycle of continuous learning and knowledge sharing.

Current Milestones

- 3,000+ Members: ProLUG’s global community continues to grow rapidly, attracting Linux enthusiasts and professionals from various backgrounds.

- 50+ Interactive Labs: Covering diverse topics, from basic Linux administration to advanced enterprise systems management.

- Ongoing Training Programs: Continuous updates to certification preparation courses, interactive workshops, and guided lab exercises.

ProLUG’s commitment to fostering a collaborative environment has made it a go-to community for anyone interested in Linux. Whether you're a beginner looking to learn the basics or an experienced professional aiming to advance your career, ProLUG offers a pathway to success.

Overview

Building standards and compliance in cybersecurity engineering ensures that systems adhere to industry best practices, regulatory requirements, and security frameworks, reducing risks and vulnerabilities.

By implementing structured guidelines through tools and frameworks like STIGs (Security Technical Implementation Guides) and the NIST CSF (National Institute of Standards and Technology Cybersecurity Framework), organizations can maintain resilience against evolving threats while ensuring accountability and regulatory alignment.

This chapter presents critical knowledge for implementing security controls in information systems.

Learning Objectives

By the end of Unit 1 students will have foundational knowledge and skills of the concepts below:

- Security Frameworks such as STIGs, CIS Controls, NIST Cybersecurity Framework

- Regulatory Compliance and Industry Standards when administering and building systems

- Skills and concepts in interacting with STIG remediation processes

- Understanding Risk Management and concepts surrounding risk vectors to organizations

- STIG Remediation and documentation skills

Relevance & Context

Those who administer and build systems are the shepherds of sensitive data, and it is their ethical and legal duty to protect those systems from malicious actors who have no regard for propriety. To succeed in securing systems, students will need to thoroughly understand the cybersecurity landscape, its myriad potential threats, and the tools engineers and administrators have at their disposal.

The concepts presented in this unit play a pivotal role in organizing and structuring a resilient security posture against threats to enterprises and other organizations. They provide processes and procedures that engineers and administrators can implement to significantly reduce the attack surface of the systems they administer, and to build a system of logging and documentation in the event of a security incident.

By thoroughly understanding these concepts, students will be armed with a set of tools for the ever-evolving landscape of cybersecurity.

Prerequisites

Students should have a strong understanding of the skills presented in the Linux Administration Course, including:

- The Command Line Interface and BASH shell skills

- Installing and Updating Linux System Packages

- Interacting with command line tools such as systemctl, mount, grep, and ss

- Ability to interact with basic SQL queries using MariaDB

- Students will need to download the latest STIG viewer, v2.18

Key terms and Definitions

CIA Triad

Regulatory Compliance

HIPAA

Industry Standards

PCI/DSS

Security Frameworks

CIS

STIG

Instructions

Fill out this sheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

- https://public.cyber.mil/stigs/downloads

- https://excalidraw.com

- https://www.open-scap.org

- https://www.sans.org/information-security-policy

- https://www.sans.org/blog/the-ultimate-list-of-sans-cheat-sheets

Downloads

The worksheet has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

Unit 1 Recording

Discussion Post #1

The first question of this course is, "What is Security?"

- Describe the CIA Triad.

- What is the relationship between Authority, Will, and Force as they relate to security?

- What are the types of controls and how do they relate to the above question?

Discussion Post #2

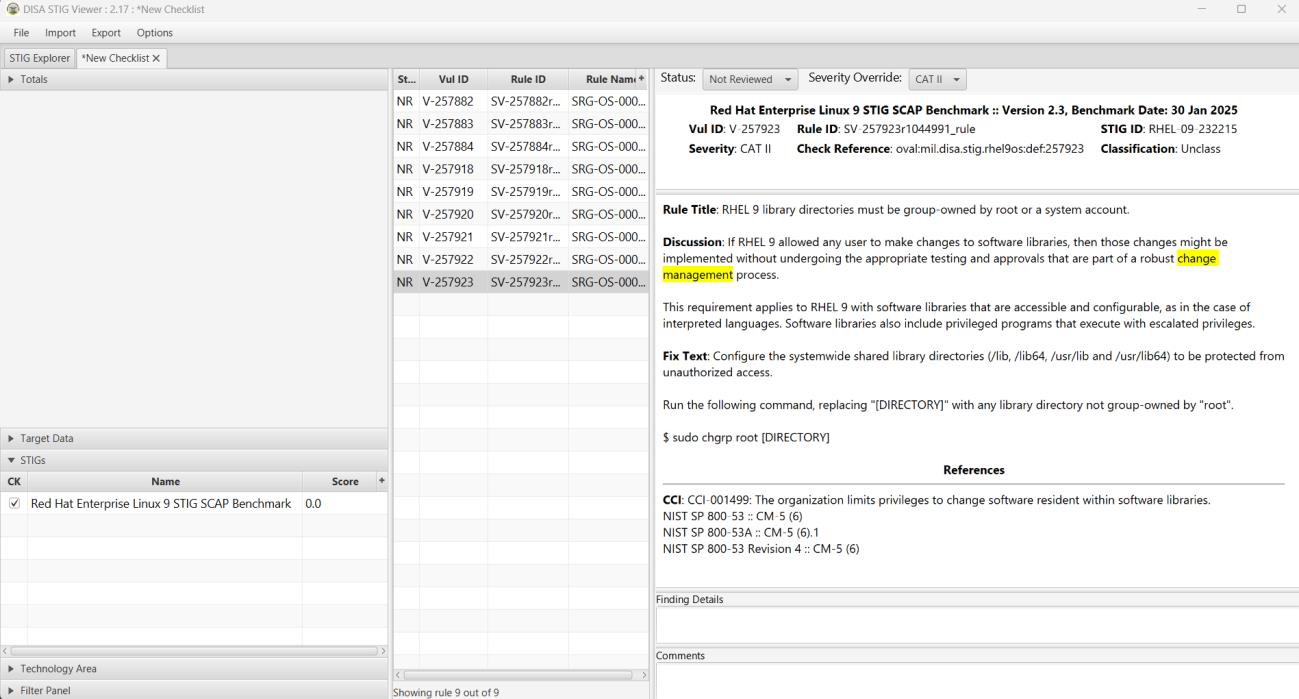

Find a STIG or compliance requirement that you do not agree is necessary for a server or service build.

- What is the STIG or compliance requirement trying to do?

- What category and type of control is it?

- Defend why you think it is not necessary. (What type of defenses do you think you could present?)

Submit your input by following the link below.

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Definitions

CIA Triad:

Regulatory Compliance:

HIPAA:

Industry Standards:

PCI/DSS:

Security Frameworks:

CIS:

STIG:

Digging Deeper

- Research a risk management framework: https://csrc.nist.gov/projects/risk-management/about-rmf

    - What are the areas of concern for risk management?

- Research the difference between quantitative and qualitative risk analysis.

    - Why might you use one or the other?

- Research ALE, SLE, and ARO.

    - What are these terms in relation to?

    - How do these help in the risk discussion?

Reflection Questions

- What questions do you still have about this week?

- How are you going to use what you've learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment, we ask that you reboot the machine from the command line so that other students will have the intended environment.

Required Materials

PuTTY or another connection tool

Lab server

Root or sudo command access

STIG Viewer 2.18 (download from https://public.cyber.mil/stigs/downloads/)

Downloads

The lab has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

Module 1: Exploring System Information

Exercise 1.1: Familiarizing ourselves with the System

mount | grep -i noexec

mount | grep -i nodev

mount | grep -i nosuid

# Approximately how many of your mounted filesystems have each of these values?
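The grep commands above match the option name anywhere in a mount line, including the device or mount point. A slightly stricter sketch, assuming the standard /proc/mounts layout (options in the fourth whitespace-separated field), counts a filesystem only when the option appears as a whole mount option. The function name count_mount_opts is hypothetical.

```shell
#!/bin/sh
# count_mount_opts (hypothetical helper): count mounted filesystems whose
# options field contains noexec, nodev, or nosuid. Input is /proc/mounts-format
# text on stdin: device mountpoint fstype options dump pass
count_mount_opts() {
  awk '{
    o = "," $4 ","                 # pad so every option is delimited by commas
    if (o ~ /,noexec,/) ne++
    if (o ~ /,nodev,/)  nd++
    if (o ~ /,nosuid,/) ns++
  }
  END { printf "noexec %d\nnodev %d\nnosuid %d\n", ne, nd, ns }'
}

# On a live system: count_mount_opts < /proc/mounts
```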

Exercise 1.2: Checking Mounted Systems

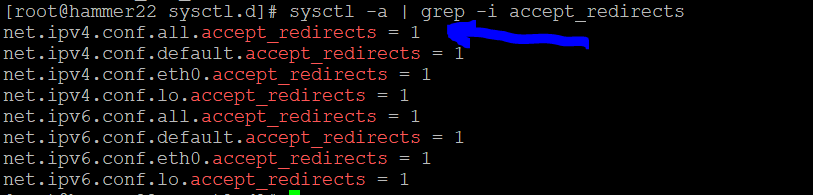

sysctl -a | grep -i ipv4

sysctl -a | grep -i ipv6

# How many of each are there?

sysctl -a | grep -i ipv4 | grep -i forward

# Does IPv4 forward on interfaces?

lsmod | grep -i tables

# What type of tables exist?
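Each key that sysctl -a lists is also exposed as a file under /proc/sys, which is handy when you want one value rather than a grep over all of them. The sketch below assumes the usual dot-to-slash mapping between key names and paths; the helper names sysctl_path and sysctl_read are hypothetical.

```shell
#!/bin/sh
# sysctl_path (hypothetical): map a sysctl key to its file under /proc/sys.
# Caveat: keys that embed interface names containing dots (e.g. VLANs)
# do not map this simply.
sysctl_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# sysctl_read (hypothetical): print "key = value" by reading the file directly,
# in the same shape that `sysctl key` prints.
sysctl_read() {
  p="$(sysctl_path "$1")"
  if [ -r "$p" ]; then
    printf '%s = %s\n' "$1" "$(cat "$p")"
  else
    printf '%s: not present on this kernel\n' "$1" >&2
    return 1
  fi
}

# Usage:
#   sysctl_read net.ipv4.ip_forward
#   sysctl_read net.ipv6.conf.all.forwarding
```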

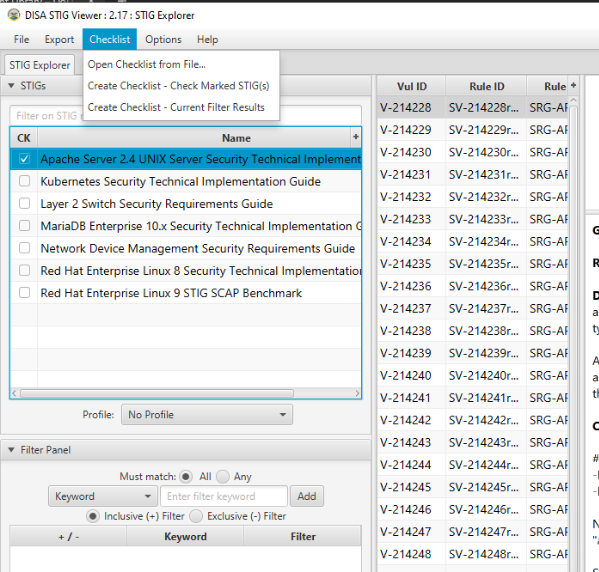

Module 2: PreLAB

-

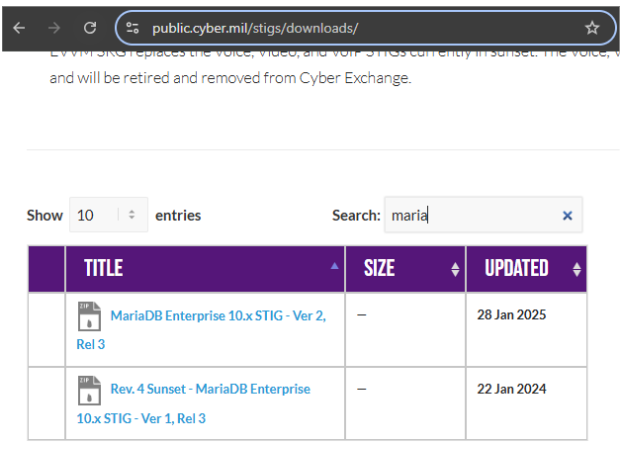

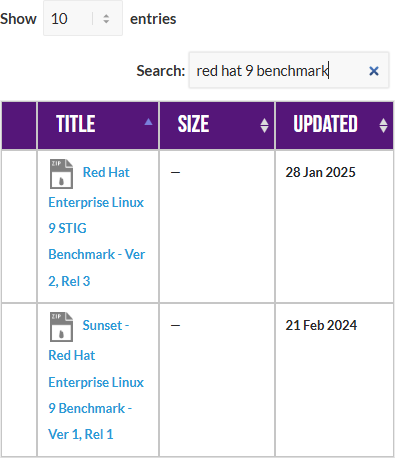

Download the STIG Viewer 2.18 from - https://public.cyber.mil/stigs/downloads/

-

Download the STIG for Mariadb and the import it into your STIG viewer.

Module 3: Lab

This lab is designed to have the engineer practice securing a Linux server or service against a set of configuration standards.

These standards are sometimes called benchmarks, checklists, or guidelines.

The engineer will be using STIG Viewer 2.18 to complete this lab.

MariaDB Service configuration:

- Connect to a hammer server.

- Install MariaDB.

dnf install mariadb-server

# Ensure that it is running

systemctl start mariadb

systemctl status mariadb

ss -ntulp | grep 3306

- Check and remediate V-253666 STIG.

    - What is the problem?

    - What is the fix?

    - What type of control is being implemented?

    - Is it set properly on your system?

Connect to MariaDB locally.

mysql

Run the SQL command in the STIG's Fix Text section:

SELECT user, max_user_connections FROM mysql.user;

Can you remediate this finding?
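If the query shows accounts with max_user_connections set to 0 (no account-specific cap, so only the server-wide setting applies), the fix statements can be generated from the query output and reviewed before running them. This is a sketch only: the helper name gen_conn_limits and the default LIMIT of 50 are assumptions, not values taken from the STIG, so substitute whatever the Fix Text or your site policy specifies.

```shell
#!/bin/sh
# gen_conn_limits (hypothetical): print ALTER USER statements for accounts
# whose max_user_connections is 0. LIMIT is a placeholder value, NOT a
# STIG-mandated number; set it to what your Fix Text or policy requires.
LIMIT="${LIMIT:-50}"

# Input: tab-separated "user<TAB>host<TAB>max_user_connections" rows, e.g. from
#   mysql -N -B -e "SELECT user, host, max_user_connections FROM mysql.user;"
gen_conn_limits() {
  awk -F '\t' -v q="'" -v limit="$LIMIT" '$3 == 0 {
    printf "ALTER USER %s%s%s@%s%s%s WITH MAX_USER_CONNECTIONS %d;\n", q, $1, q, q, $2, q, limit
  }'
}

# Review the generated file before applying it, for example:
#   mysql -N -B -e "SELECT user, host, max_user_connections FROM mysql.user;" \
#     | gen_conn_limits > conn-limits.sql
#   mysql < conn-limits.sql
```

Generating a file instead of piping straight into mysql leaves room to drop system accounts you do not want to limit.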

- Check and remediate V-253677 STIG.

    - What is the problem?

    - What is the fix?

    - What type of control is being implemented?

    - Is it set properly on your system?

- Check and remediate V-253678 STIG.

    - What is the problem?

    - What is the fix?

    - What type of control is being implemented?

    - Is it set properly on your system?

- Check and remediate V-253734 STIG.

    - What is the problem?

    - What is the fix?

    - What type of control is being implemented?

    - Is it set properly on your system?

Be sure to reboot the lab machine from the command line when you are done.

Overview

Understanding and implementing network standards and compliance measures is key to making critical security controls effective.

This unit introduces foundational knowledge for analyzing, configuring, and hardening networking components using tools and frameworks such as STIGs and OpenSCAP, along with secure DNS configuration.

Learning Objectives

By the end of Unit 2 students will have foundational knowledge and skills of the concepts below:

- Identifying and analyzing STIGs related to Linux networking.

- Understand and configure secure name resolution using nsswitch.conf and DNS.

- Utilizing tools like tcpdump, ngrep, ss, and netstat to monitor network behavior.

- Applying OpenSCAP and SCC tools for network compliance assessments.

- Exploring known network-based exploits and understanding their anatomy via the Diamond Model of Intrusion Analysis.

Relevance and Context

Networks represent one of the most common attack vectors in enterprise systems. Misconfigured name resolution, open ports, and insecure protocols are all doorways to intrusion. As system engineers, building resilient systems requires a deep understanding of how data flows through these pathways and what tools can monitor and secure them.

By learning to assess and remediate network-related STIGs and implementing structured standards, students will gain the skills to reduce ingress risk and respond effectively to threats. These skills are not only crucial for compliance but also for real-world defense.

Prerequisites

To be successful, students should have a working understanding of skills and tools including:

- The Command Line Interface and BASH shell skills

- Installing and Updating Linux System Packages

- Network concepts including TCP/IP, DNS, and more

- Interacting with command line tools such as sysctl, firewalld, grep, and oscap

- Ability to edit files with vim

- Students will need to download the latest STIG viewer, v2.18

Key Terms and Definitions

sysctl

nsswitch.conf

DNS

Openscap

CIS Benchmarks

ss/netstat

tcpdump

ngrep

Instructions

Fill out this sheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

- https://www.sans.org/information-security-policy/

- https://www.sans.org/blog/the-ultimate-list-of-sans-cheat-sheets/

- https://docs.rockylinux.org/gemstones/core/view_kernel_conf/

- https://ciq.com/blog/demystifying-and-troubleshooting-name-resolution-in-rocky-linux/

- https://www.activeresponse.org/wp-content/uploads/2013/07/diamond.pdf

Downloads

The worksheet has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

Unit 2 Recording

Discussion Post #1

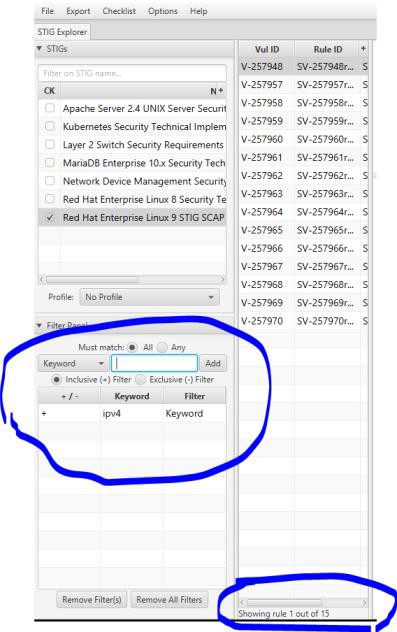

There are 401 STIGs for RHEL 9. If you filter in your STIG Viewer for sysctl, there are 33 (mostly network focused); for ssh, 39; and for network, 58. There are some overlaps between those, but review them and answer these questions:

- As systems engineers why are we focused on protecting the network portion of our server builds?

- Why is it important to understand all the possible ingress points that exist on our servers?

- Why is it so important to understand the behaviors of processes that are connecting on those ingress points?

Discussion Post #2

Read this: https://ciq.com/blog/demystifying-and-troubleshooting-name-resolution-in-rocky-linux/ or similar blogs on DNS and host file configurations.

- What is the significance of the nsswitch.conf file?

- What security problems are associated with DNS, and what are some common exploits? (You may have to look into more blogs or posts for this.)

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Definitions

sysctl:

nsswitch.conf:

DNS:

Openscap:

CIS Benchmarks:

ss/netstat:

tcpdump:

ngrep:

Digging Deeper

- See if you can find any DNS exploits that have been used and written up in the Diamond Model of Intrusion Analysis format. If you can, what are the primary actors and actions that made up the attack?

Reflection Questions

- What questions do you still have about this week?

- How are you going to use what you've learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment, we ask that you reboot the machine from the command line so that other students will have the intended environment.

Required Materials

PuTTY or another connection tool

Lab server

Root or sudo command access

STIG Viewer 2.18 (download from https://public.cyber.mil/stigs/downloads/)

Downloads

The lab has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .docx could be transposed to a .md file.

Pre-Lab Warm-Up

EXERCISES (Warmup to quickly run through your system and familiarize yourself)

sysctl -a | grep -i ipv4 | grep -i forward

# Does this system appear to be set to forward? Why or why not?

sysctl -a | grep -i ipv4 | grep -i martian

# What are martians and is this system allowing them?

sysctl -a | grep -i panic

# How does this system handle panics?

sysctl -a | grep -i crypto

# What are the settings you see? Is FIPS enabled?

cat /proc/cmdline

fips-mode-setup --check

sestatus

cat /etc/selinux/config

What information about the security posture of the system can you see here?

Can you verify SELINUX status?

Can you verify FIPS status?
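fips-mode-setup --check and sestatus summarize state that the kernel also exposes as plain files. A minimal sketch, assuming the usual RHEL-family locations (/proc/sys/crypto/fips_enabled and /sys/fs/selinux/enforce); the function names and the *_FILE override variables are hypothetical. It reports only the kernel flags, whereas fips-mode-setup --check also compares the system crypto policy, so treat it as a complement rather than a replacement.

```shell
#!/bin/sh
# Read FIPS and SELinux state directly from kernel interfaces.
# FIPS_FILE and SELINUX_ENFORCE_FILE are hypothetical overrides for testing.
FIPS_FILE="${FIPS_FILE:-/proc/sys/crypto/fips_enabled}"
SELINUX_ENFORCE_FILE="${SELINUX_ENFORCE_FILE:-/sys/fs/selinux/enforce}"

fips_status() {
  if [ ! -r "$FIPS_FILE" ]; then echo unknown; return; fi
  if [ "$(cat "$FIPS_FILE")" = 1 ]; then echo enabled; else echo disabled; fi
}

selinux_status() {
  # selinuxfs (and its enforce file) is only mounted when SELinux is enabled.
  if [ ! -r "$SELINUX_ENFORCE_FILE" ]; then echo disabled; return; fi
  if [ "$(cat "$SELINUX_ENFORCE_FILE")" = 1 ]; then echo enforcing; else echo permissive; fi
}

echo "FIPS (kernel flag): $(fips_status)"
echo "SELinux: $(selinux_status)"
```

Compare its output with sestatus and fips-mode-setup --check; a mismatch is itself worth investigating.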

Download the STIG Viewer 2.18 from https://public.cyber.mil/stigs/downloads/

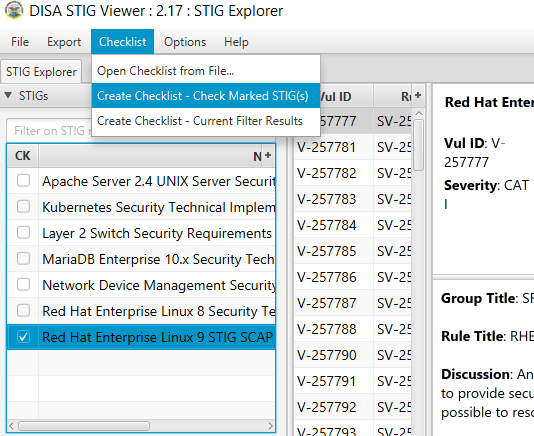

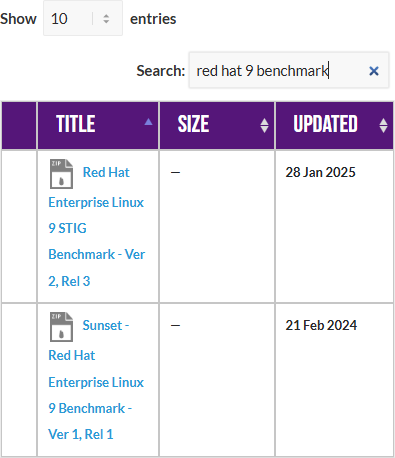

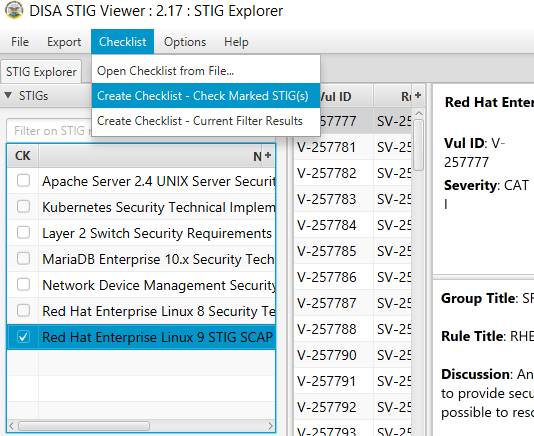

Download the STIG for RHEL 9 and then import it into your STIG Viewer

Create a checklist from the opened STIG for RHEL 9

Lab 🧪

This lab is designed to have the engineer practice securing a Linux server or service against a set of configuration standards. These standards are sometimes called benchmarks, checklists, or guidelines. The engineer will be using STIG Viewer 2.18 to complete this lab.

Network Service configuration

Connect to a hammer server

Filter by ipv4 and see how many STIGs you have.

Examine STIG V-257957

What is the problem?

What is the fix?

What type of control is being implemented?

Is it set properly on your system?

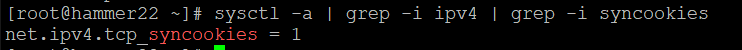

sysctl -a | grep -i ipv4 | grep -i syncookies

Can you remediate this finding?

In this case it's already correctly set.

If we needed to, we would set that value in /etc/sysctl.d/00-remediate.conf and then reload sysctl with sysctl --system.
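The remediation step above can be scripted so that re-running it never leaves two conflicting lines for the same key in the drop-in file. A minimal sketch: the function name set_sysctl and the SYSCTL_D override are hypothetical, and the file name follows the lab. Because sysctl --system applies configuration files in name order and a later setting for the same key wins, a low-numbered file such as 00-remediate.conf can be overridden by a file that sorts after it, so it is worth grepping the other sysctl configuration files for the same key.

```shell
#!/bin/sh
# set_sysctl KEY VALUE (hypothetical): persist one setting in the lab's
# drop-in file, replacing any earlier line for the same key.
# SYSCTL_D is a hypothetical override for testing; normally /etc/sysctl.d.
SYSCTL_D="${SYSCTL_D:-/etc/sysctl.d}"

set_sysctl() {
  dropin="$SYSCTL_D/00-remediate.conf"
  mkdir -p "$SYSCTL_D" && touch "$dropin" || return 1
  # Escape dots so the key is matched literally at the start of a line.
  re="^[[:space:]]*$(printf '%s' "$1" | sed 's/[.]/\\./g')[[:space:]]*="
  grep -v -- "$re" "$dropin" > "$dropin.tmp" || true
  printf '%s = %s\n' "$1" "$2" >> "$dropin.tmp"
  mv "$dropin.tmp" "$dropin"
}

# Usage on the lab machine (as root):
#   set_sysctl net.ipv4.tcp_syncookies 1
#   sysctl --system                   # re-read all sysctl configuration files
#   sysctl net.ipv4.tcp_syncookies    # confirm the running value
```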

Check and remediate V-257958 STIG

What is the problem?

What is the fix?

What type of control is being implemented?

Is it set properly on your system?

How would you go about remediating this on your system?

Check and remediate V-257960 and V-257961 STIGs

What is the problem? How are they related?

What is the fix?

What type of control is being implemented?

Is it set properly on your system?

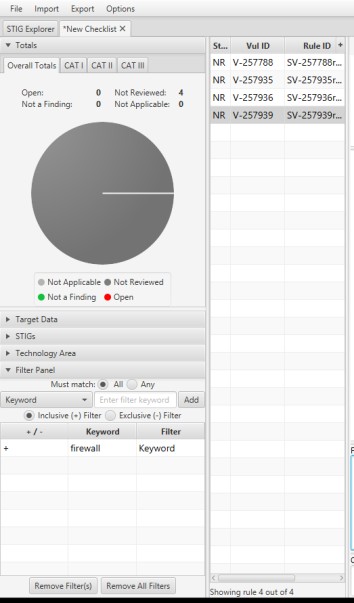

Filter by firewall

How many STIGS do you see?

What do these STIGS appear to be trying to do? What types of controls are they?

Firewall port exposure

Scenario:

Your team needs to use node_exporter with Prometheus to allow scraping of system information back to your network monitoring solution. You are running a firewall, so you need to expose the port that node_exporter runs on to the network outside of your system.

Expose a network port through your firewall

# Verify that your firewall is running

systemctl status firewalld

# Verify that your firewall has the service defined

firewall-cmd --get-services | grep -i node

ls /usr/lib/firewalld/services | grep -i node

# Verify that the service is not currently enabled for node_exporter

firewall-cmd --list-services

# Examine the structure of the firewall .xml file

cat /usr/lib/firewalld/services/prometheus-node-exporter.xml

# Enable the service through your firewall

firewall-cmd --permanent --add-service=prometheus-node-exporter

# Reload so the changes take effect

firewall-cmd --reload

# Verify that the service is currently enabled for node_exporter

firewall-cmd --list-services
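Because --permanent writes only to the saved configuration and --reload is what loads it into the running firewall, it can be useful to confirm the service appears in both views. A minimal sketch with hypothetical helper names (in_list, check_service); firewall-cmd also offers --query-service=NAME for a single yes/no answer on the runtime side.

```shell
#!/bin/sh
# in_list NAME (hypothetical): succeed if NAME is one of the space-separated
# words on stdin, such as the output of `firewall-cmd --list-services`.
in_list() {
  tr ' ' '\n' | grep -qxF -- "$1"
}

# check_service NAME (hypothetical): report whether a firewalld service is
# enabled in the runtime configuration and in the permanent configuration.
check_service() {
  if firewall-cmd --list-services | in_list "$1"; then rt=enabled; else rt=missing; fi
  if firewall-cmd --permanent --list-services | in_list "$1"; then pm=enabled; else pm=missing; fi
  printf 'runtime: %s, permanent: %s\n' "$rt" "$pm"
}

# On the lab machine (as root): check_service prometheus-node-exporter
```

A service that shows as permanent but not runtime usually means the --reload step was skipped.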

Automate STIG remediation on a system

There are many options, and the STIG remediation steps are well known. Here the learner will examine a few ways to generate Ansible and shell fixes for a system, which can then be applied in full or in part. Choosing which fixes to apply, and weighing the trade-off between security and productivity, is where a security-focused Linux engineer adds real value.

Download and extract a STIG remediation tool

Check the /labs folder on the server for a [course]_[unit#].zip file to complete the activities.

cd /root

mkdir stigs

cd stigs

wget -O U_RHEL_9_V2R4_STIG_Ansible.zip https://dl.dod.cyber.mil/wp-content/uploads/stigs/zip/U_RHEL_9_V2R4_STIG_Ansible.zip

unzip U_RHEL_9_V2R4_STIG_Ansible.zip

mkdir ansible

cp rhel9STIG-ansible.zip ansible/

cd ansible

unzip rhel9STIG-ansible.zip

Examine the default values for STIGS

cd /root/stigs/ansible/roles/rhel9STIG/defaults/

vim main.yml

Search for a few of the STIG numbers you used earlier and see their default values.

- use /257784 to search

Examine the playbook to see how those are applied in a running system.

vim /root/stigs/ansible/roles/rhel9STIG/tasks/main.yml

- use /257784 to search for the STIG from above and see how it is fixed in the playbook.
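If you prefer to stay on the command line rather than searching in vim, this minimal sketch prints every line that mentions a STIG ID in both the role's defaults and its tasks, with file names and line numbers. That way the default value and the task that enforces it can be read side by side. The function name is hypothetical, and the role path defaults to the one used in this lab.

```shell
#!/usr/bin/env bash
# Show every line mentioning a STIG ID in the role's defaults and tasks files.
# grep prefixes each match with "file:line:" because two files are searched.
find_stig() {
  local stig_id="$1"
  local role_dir="${2:-/root/stigs/ansible/roles/rhel9STIG}"   # lab path; override if yours differs
  grep -n -- "$stig_id" "$role_dir/defaults/main.yml" "$role_dir/tasks/main.yml"
}

# Example: find_stig 257784
```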

Create an Ansible playbook from OpenSCAP

dnf -y install openscap-scanner openscap-utils scap-security-guide

cd /root

mkdir openscap

cd openscap

# Generate the Ansible

oscap xccdf generate fix --profile ospp --fix-type ansible /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml > draft-disa-remediate.yml

# Examine the file

vim draft-disa-remediate.yml

# Generate a BASH version

oscap xccdf generate fix --profile ospp --fix-type bash /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml > draft-disa-remediate.sh

# Examine the file

vim draft-disa-remediate.sh

Be sure to reboot the lab machine from the command line when you are done.

Overview

User access in larger organizations requires more sophisticated controls. For this purpose, Active Directory (AD) and the Lightweight Directory Access Protocol (LDAP) have become popular choices, as they offer centralized and robust ways of controlling access. In this chapter, you will learn why AD and LDAP are popular choices.

Learning Objectives

- Understand how LDAP or AD works and why it is beneficial.

- High level understanding of hardening Rocky Linux, a RHEL adjacent distro.

- Gain a basic understanding of PAM.

Relevance and Context

In enterprise environments, managing user identities and system access at scale is critical for security, compliance, and operational efficiency. Lightweight Directory Access Protocol (LDAP) and Active Directory (AD) provide centralized authentication, authorization, and account management capabilities that far surpass local account management methods.

Understanding these systems is foundational for administrators working with Rocky Linux, a Red Hat Enterprise Linux (RHEL) derivative, especially when implementing compliance standards such as DISA STIGs or CIS Benchmarks. Mastering integration points like PAM (Pluggable Authentication Modules) and services like sssd allows administrators to ensure secure and scalable authentication across diverse systems.

Prerequisites

To be successful, students should have a working understanding of skills and tools including:

- Basic Directory navigation.

- Knowledge of editing config files.

- Basic knowledge of STIG Viewer.

- Understanding of systemd services and the sysctl command.

Key Terms and Definitions

PAM

AD

LDAP

sssd

oddjob

krb5

realm/realmd

wheel (system group in RHEL)

Instructions

Fill out this sheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

- https://www.sans.org/information-security-policy/

- https://www.sans.org/blog/the-ultimate-list-of-sans-cheat-sheets/

- https://docs.rockylinux.org/guides/security/pam/

- https://docs.rockylinux.org/guides/security/authentication/active_directory_authentication/

- https://docs.rockylinux.org/books/admin_guide/06-users/

Downloads

The worksheet has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

Unit 3 Recording

Discussion Post #1

There are 16 STIGs that involve PAM for RHEL 9. Read the guide from Rocky Linux here: https://docs.rockylinux.org/guides/security/pam/

- What are the mechanisms and how do they affect PAM functionality?

  - Review /etc/pam.d/sshd on a Linux system. What is happening in that file relative to these functionalities?

- What are the common PAM modules?

  - Review /etc/pam.d/sshd on a Linux system. What is happening in that file relative to these functionalities?

- Look for a blog post or article about PAM that discusses real world application. Post it here and give us a quick synopsis. (Bonus arbitrary points if you find one of our ProLUG members' blogs on the subject.)

Discussion Post #2

Read about active directory (or LDAP) configurations of Linux via sssd here:

https://docs.rockylinux.org/guides/security/authentication/active_directory_authentication

- Why do we not want to just use local authentication in Linux? Or really any system?

- There are 4 SSSD STIGS.

- What are they?

- What do they seek to do with the system?

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Definitions

PAM:

AD:

LDAP:

sssd:

oddjob:

krb5:

realm/realmd:

wheel (system group in RHEL):

Digging Deeper

- How does /etc/security/access.conf come into play with pam_access? Read up on it here: https://man7.org/linux/man-pages/man8/pam_access.8.html

  - Can you find any other good resources?

  - What is the structure of the access.conf file directives?

- What other important user access or user management information do you learn by reading this? https://docs.rockylinux.org/books/admin_guide/06-users/

- What are the contents of the /etc/login.defs file? Why do you care?
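To make the structure of these two files concrete, here is a minimal sketch with two hypothetical helper functions. `access_rules` prints each access.conf rule as its three colon-separated fields: permission (`+` grants, `-` denies), users/groups, and origins. pam_access scans these rules top to bottom, and the first match decides. `login_defs_value` prints the value set for a single login.defs key. Both only read the files; they do not validate the rules.

```shell
#!/usr/bin/env bash
# access_rules: print /etc/security/access.conf rules as tab-separated
#   permission, users/groups, origins.
# Each line is split on its first two colons only, so an origins field that
# itself contains colons (such as an IPv6 address) survives intact.
access_rules() {
  awk '
    function trim(s) { sub(/^[ \t]+/, "", s); sub(/[ \t]+$/, "", s); return s }
    /^[ \t]*#/ || NF == 0 { next }
    {
      p = index($0, ":");          if (p == 0) next
      perm = substr($0, 1, p - 1); rest = substr($0, p + 1)
      q = index(rest, ":");        if (q == 0) next
      who = substr(rest, 1, q - 1); origins = substr(rest, q + 1)
      printf "%s\t%s\t%s\n", trim(perm), trim(who), trim(origins)
    }' "${1:-/etc/security/access.conf}"
}

# login_defs_value: print the first value set for one key in /etc/login.defs
# (e.g. PASS_MAX_DAYS, UMASK, ENCRYPT_METHOD). Commented-out keys are ignored
# because their first field starts with "#".
login_defs_value() {
  awk -v key="$1" '$1 == key { print $2; exit }' "${2:-/etc/login.defs}"
}

# Examples: access_rules
#           login_defs_value PASS_MAX_DAYS
```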

Reflection Questions

- What questions do you still have about this week?

- How are you going to use what you've learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment, we ask that you reboot the machine from the command line so that other students will have the intended environment.

Required Materials

PuTTY or other connection tool

Lab Server

Root or sudo command access

STIG Viewer 2.18 (download from https://public.cyber.mil/stigs/downloads/ )

Download the STIG for RHEL 9 and then import it into your STIG Viewer

Create a checklist from the opened STIG for RHEL 9

Downloads

The lab has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .docx could be transposed to a .md file.

EXERCISES (Warmup to quickly run through your system and familiarize yourself)

ls -l /etc/pam.d/

# What are the permissions and names of files? Can everyone read them?

cat /etc/pam.d/sshd

# What information do you see in this file?

# Does any of it look familiar to you?
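Every non-comment line in a PAM service file has the same shape: type, control, module, then optional module arguments. This sketch uses a hypothetical `pam_table` function to print a file such as /etc/pam.d/sshd in those columns so the structure stands out. For `include` and `substack` lines, the "module" column is the name of another file in /etc/pam.d rather than a `.so` module. Bracketed controls like `[success=1 default=ignore]` contain spaces, so they are reassembled into one column.

```shell
#!/usr/bin/env bash
# Print a PAM service file as tab-separated columns: type, control, module,
# arguments. Comments and blank lines are skipped, and a bracketed control
# such as "[success=1 default=ignore]" is kept in one column even though it
# contains spaces. A leading "-" on the type (e.g. "-session") is left as-is.
pam_table() {
  awk '
    /^[ \t]*#/ || NF == 0 { next }
    {
      i = 2
      ctrl = $i
      if (ctrl ~ /^\[/) {
        # Keep appending fields until the closing bracket is reached.
        while (ctrl !~ /\]$/ && i < NF) { i++; ctrl = ctrl " " $i }
      }
      i++
      module = $i
      args = ""
      for (j = i + 1; j <= NF; j++) args = args (args == "" ? "" : " ") $j
      printf "%s\t%s\t%s\t%s\n", $1, ctrl, module, args
    }' "${1:-/etc/pam.d/sshd}"
}

# Example: pam_table /etc/pam.d/sshd | column -t -s "$(printf '\t')"
```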

Pre-Lab Warm-Up

Download the STIG Viewer 2.18 from - https://public.cyber.mil/stigs/downloads/

Download the STIG for RHEL 9 and then import it into your STIG Viewer

Create a checklist from the opened STIG for RHEL 9

Lab 🧪

This lab is designed to have the engineer practice securing a Linux server or service against a set of configuration standards. These standards are sometimes called benchmarks, checklists, or guidelines. The engineer will be using STIG Viewer 2.18 to complete this lab.

PAM configuration

Connect to a hammer server

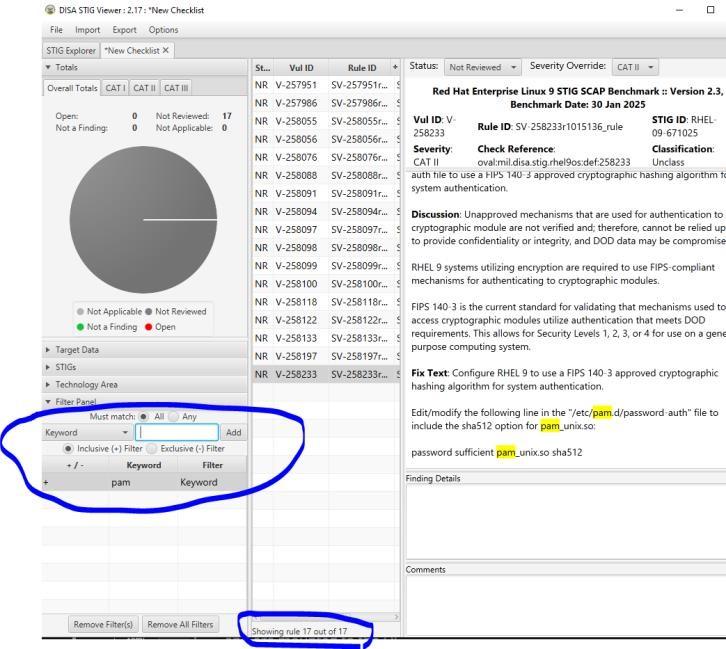

Filter by pam and see how many STIGS you have. (Why is it really only 16?)

Examine STIG V-257986

What is the problem?

What is the fix?

What type of control is being implemented?

Is it set properly on your system?

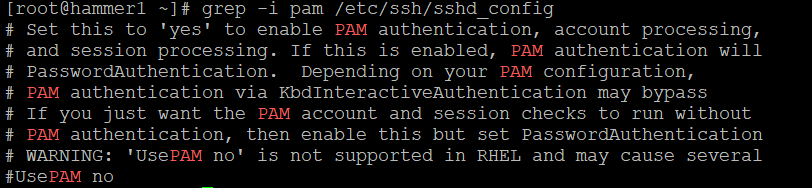

grep -i pam /etc/ssh/sshd_config

Can you remediate this finding?
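Assuming this finding concerns the `UsePAM` directive that the grep above inspects, keep in mind that sshd keywords are case-insensitive and that sshd uses the first value it obtains for a keyword. Because of that, a later `UsePAM` line does not override an earlier one. The sketch below uses a hypothetical `first_usepam` function to report the value that wins within a single file. On RHEL 9, sshd_config pulls in /etc/ssh/sshd_config.d/*.conf through an `Include` line, so the authoritative check is the effective configuration from `sshd -T | grep -i usepam`, run as root.

```shell
#!/usr/bin/env bash
# Print the first UsePAM value found in a single sshd_config-style file,
# lower-cased, or "unset" if the keyword never appears uncommented.
# Included files are NOT followed; use "sshd -T" for the effective value.
first_usepam() {
  awk '
    tolower($1) == "usepam" { print tolower($2); found = 1; exit }
    END { if (!found) print "unset" }
  ' "${1:-/etc/ssh/sshd_config}"
}

# Example: first_usepam /etc/ssh/sshd_config
```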

Check and remediate STIG V-258055

What is the problem?

What is the fix?

What type of control is being implemented?

Are there any major implications to think about with this change on your system? Why or why not?

Is it set properly on your system?

How would you go about remediating this on your system?

Check and remediate STIG V-258098

What is the problem?

What is the fix?

What type of control is being implemented?

Is it set properly on your system?

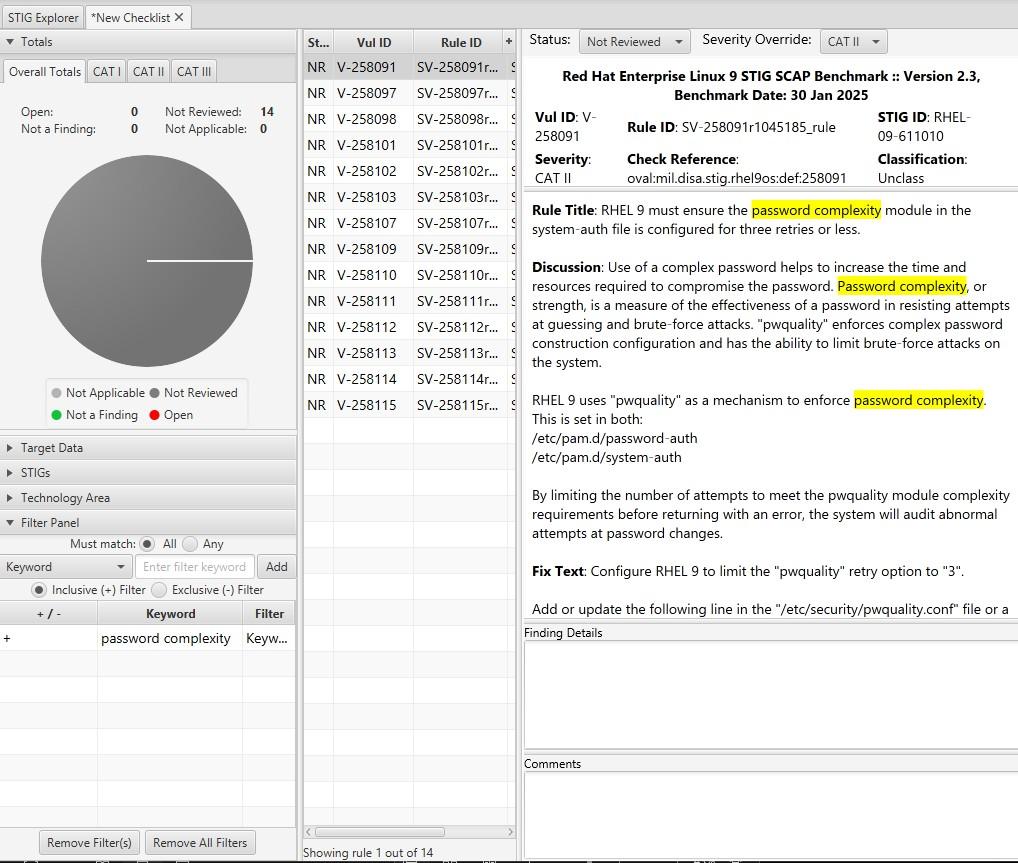

Filter by "password complexity"

How many are there?

What are the password complexity rules?

Are there any you haven't seen before?

Filter by sssd

How many STIGS do you see?

What do these STIGS appear to be trying to do? What types of controls are they?

OpenLDAP Setup

You will likely not build an LDAP server in a real world environment. We are building one here to understand how it works and to be able to complete the lab. In a typical corporate environment, this role is most likely filled by Active Directory.

To simplify some of the typing in this lab, there is a file located at /lab_work/identity_and_access_management.tar.gz that you can pull down to your system with the correct .ldif files.

[root@hammer1 ~]# cp /lab_work/identity_and_access_management.tar.gz .

[root@hammer1 ~]# tar -xzvf identity_and_access_management.tar.gz

Install and configure OpenLDAP

1. Stop the warewulf client

[root@hammer1 ~]# systemctl stop wwclient

2. Edit your /etc/hosts file

Look for and edit the line that has your current server

[root@hammer1 ~]# vi /etc/hosts

Entry for hammer1 for example:

192.168.200.151 hammer1 hammer1-default ldap.prolug.lan ldap

3. Setup dnf repo

[root@hammer1 ~]# dnf config-manager --set-enabled plus

[root@hammer1 ~]# dnf repolist

[root@hammer1 ~]# dnf -y install openldap-servers openldap-clients openldap

4. Start slapd with systemctl

[root@hammer1 ~]# systemctl start slapd

[root@hammer1 ~]# ss -ntulp | grep slapd

5. Allow ldap through the firewall

[root@hammer1 ~]# firewall-cmd --add-service={ldap,ldaps} --permanent

[root@hammer1 ~]# firewall-cmd --reload

[root@hammer1 ~]# firewall-cmd --list-all

6. Generate a password (our example uses testpassword). This returns a salted SSHA hash. Save the password and the salted hash for later input.

[root@hammer1 ~]# slappasswd

Output:

New password:

Re-enter new password:

{SSHA}wpRvODvIC/EPYf2GqHUlQMDdsFIW5yig
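An `{SSHA}` value is base64(SHA1(password + salt) + salt). The 32 base64 characters after `{SSHA}` above decode to 24 bytes: a 20-byte SHA-1 digest followed by a 4-byte salt. That salt is also why running slappasswd twice with the same password gives different hashes. The minimal sketch below reproduces the format with `openssl`, using hypothetical `ssha_hash` and `ssha_verify` functions. It is meant for understanding, not as a replacement for slappasswd. The 4-byte salt length matches the hash length shown here.

```shell
#!/usr/bin/env bash
# {SSHA} hashes are base64( SHA1(password + salt) + salt ).
# Binary data stays in temporary files because shell variables cannot hold NUL bytes.

ssha_hash() {
  local password="$1" tmp
  tmp=$(mktemp -d)
  openssl rand 4 > "$tmp/salt"                       # 4 random salt bytes
  { printf '%s' "$password"; cat "$tmp/salt"; } | openssl dgst -sha1 -binary > "$tmp/digest"
  printf '{SSHA}%s\n' "$(cat "$tmp/digest" "$tmp/salt" | base64 | tr -d '\n')"
  rm -rf "$tmp"
}

ssha_verify() {
  local password="$1" hash="$2" prefix='{SSHA}' tmp rc
  tmp=$(mktemp -d)
  printf '%s' "${hash#"$prefix"}" | base64 -d > "$tmp/raw"
  head -c 20 "$tmp/raw" > "$tmp/digest"              # SHA-1 digests are 20 bytes
  tail -c +21 "$tmp/raw" > "$tmp/salt"               # the rest is the salt
  { printf '%s' "$password"; cat "$tmp/salt"; } | openssl dgst -sha1 -binary | cmp -s - "$tmp/digest"
  rc=$?
  rm -rf "$tmp"
  return "$rc"
}

# Example: ssha_verify testpassword "$(ssha_hash testpassword)" && echo match
```

`ssha_verify` returns 0 only when the password, combined with the salt stored inside the hash, reproduces the stored digest.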

7. Change the password (replace the olcRootPW value below with the {SSHA} hash that slappasswd returned for you)

[root@hammer1 ~]# vi changerootpass.ldif

dn: olcDatabase={0}config,cn=config
changetype: modify
replace: olcRootPW
olcRootPW: {SSHA}vKobSZO1HDGxp2OElzli/xfAzY4jSDMZ

[root@hammer1 ~]# ldapadd -Y EXTERNAL -H ldapi:/// -f changerootpass.ldif

Output:

SASL/EXTERNAL authentication started

SASL username: gidNumber=0+uidNumber=0,cn=peercred,cn=external,cn=auth

SASL SSF: 0

modifying entry "olcDatabase={0}config,cn=config"

8. Add the basic schemas

ldapadd -Y EXTERNAL -H ldapi:/// -f /etc/openldap/schema/cosine.ldif

ldapadd -Y EXTERNAL -H ldapi:/// -f /etc/openldap/schema/nis.ldif

ldapadd -Y EXTERNAL -H ldapi:/// -f /etc/openldap/schema/inetorgperson.ldif

9. Set up the domain (USE THE PASSWORD YOU GENERATED EARLIER)

[root@hammer1 ~]# vi setdomain.ldif

dn: olcDatabase={1}monitor,cn=config
changetype: modify
replace: olcAccess
olcAccess: {0}to * by dn.base="gidNumber=0+uidNumber=0,cn=peercred,cn=external,cn=auth" read by dn.base="cn=Manager,dc=prolug,dc=lan" read by * none

dn: olcDatabase={2}mdb,cn=config
changetype: modify
replace: olcSuffix
olcSuffix: dc=prolug,dc=lan

dn: olcDatabase={2}mdb,cn=config
changetype: modify
replace: olcRootDN
olcRootDN: cn=Manager,dc=prolug,dc=lan

dn: olcDatabase={2}mdb,cn=config
changetype: modify
add: olcRootPW
olcRootPW: {SSHA}s4x6uAxcAPZN/4e3pGnU7UEIiADY0/Ob

dn: olcDatabase={2}mdb,cn=config
changetype: modify
add: olcAccess
olcAccess: {0}to attrs=userPassword,shadowLastChange by dn="cn=Manager,dc=prolug,dc=lan" write by anonymous auth by self write by * none
olcAccess: {1}to dn.base="" by * read
olcAccess: {2}to * by dn="cn=Manager,dc=prolug,dc=lan" write by * read

10. Run it

[root@hammer1 ~]# ldapmodify -Y EXTERNAL -H ldapi:/// -f setdomain.ldif

Output:

SASL/EXTERNAL authentication started

SASL username: gidNumber=0+uidNumber=0,cn=peercred,cn=external,cn=auth

SASL SSF: 0

modifying entry "olcDatabase={1}monitor,cn=config"

modifying entry "olcDatabase={2}mdb,cn=config"

modifying entry "olcDatabase={2}mdb,cn=config"

modifying entry "olcDatabase={2}mdb,cn=config"

modifying entry "olcDatabase={2}mdb,cn=config"

11. Search and verify the domain is working.

[root@hammer1 ~]# ldapsearch -H ldap:// -x -s base -b "" -LLL "namingContexts"

Output:

dn:

namingContexts: dc=prolug,dc=lan

12. Add the base group and organization.

[root@hammer1 ~]# vi addou.ldif

dn: dc=prolug,dc=lan
objectClass: top
objectClass: dcObject
objectclass: organization
o: My prolug Organisation
dc: prolug

dn: cn=Manager,dc=prolug,dc=lan
objectClass: organizationalRole
cn: Manager
description: OpenLDAP Manager

dn: ou=People,dc=prolug,dc=lan
objectClass: organizationalUnit
ou: People

dn: ou=Group,dc=prolug,dc=lan
objectClass: organizationalUnit
ou: Group

[root@hammer1 ~]# ldapadd -x -D cn=Manager,dc=prolug,dc=lan -W -f addou.ldif

13. Verifying

[root@hammer1 ~]# ldapsearch -H ldap:// -x -s base -b "" -LLL "+"

[root@hammer1 ~]# ldapsearch -x -b "dc=prolug,dc=lan" ou

14. Add a user

Generate a password (use testuser1234)

[root@hammer1 ~]# slappasswd

[root@hammer1 ~]# vi adduser.ldif

dn: uid=testuser,ou=People,dc=prolug,dc=lan
objectClass: inetOrgPerson
objectClass: posixAccount
objectClass: shadowAccount
cn: testuser
sn: temp
userPassword: {SSHA}yb6e0ICSdlZaMef3zizvysEzXRGozQOK
loginShell: /bin/bash
uidNumber: 15000
gidNumber: 15000
homeDirectory: /home/testuser
shadowLastChange: 0
shadowMax: 0
shadowWarning: 0

dn: cn=testuser,ou=Group,dc=prolug,dc=lan
objectClass: posixGroup
cn: testuser
gidNumber: 15000
memberUid: testuser

[root@hammer1 ~]# ldapadd -x -D cn=Manager,dc=prolug,dc=lan -W -f adduser.ldif
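Adding more than one test user by hand means editing several matching fields each time. This sketch uses a hypothetical `make_user_ldif` function to write the same two entries as adduser.ldif: a POSIX user and a private group that share one numeric ID. The user name, ID, and password hash are supplied as arguments, and the attribute values are copied from the file above. `slappasswd -s` generates the hash non-interactively.

```shell
#!/usr/bin/env bash
# Emit LDIF for one POSIX user plus a matching private group, mirroring
# adduser.ldif. Function and argument names are hypothetical; the base DN
# defaults to this lab's dc=prolug,dc=lan.
make_user_ldif() {
  local uid="$1" id_number="$2" ssha="$3" base="${4:-dc=prolug,dc=lan}"
  cat <<EOF
dn: uid=${uid},ou=People,${base}
objectClass: inetOrgPerson
objectClass: posixAccount
objectClass: shadowAccount
cn: ${uid}
sn: temp
userPassword: ${ssha}
loginShell: /bin/bash
uidNumber: ${id_number}
gidNumber: ${id_number}
homeDirectory: /home/${uid}
shadowLastChange: 0
shadowMax: 0
shadowWarning: 0

dn: cn=${uid},ou=Group,${base}
objectClass: posixGroup
cn: ${uid}
gidNumber: ${id_number}
memberUid: ${uid}
EOF
}

# Example:
# make_user_ldif testuser2 15001 "$(slappasswd -s testuser1234)" > adduser2.ldif
# ldapadd -x -D cn=Manager,dc=prolug,dc=lan -W -f adduser2.ldif
```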

15. Verify that your user is in the system.

[root@hammer1 ~]# ldapsearch -x -b "ou=People,dc=prolug,dc=lan"

16. Secure the system with TLS (accept all defaults)

[root@hammer1 ~]# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/pki/tls/ldapserver.key -out /etc/pki/tls/ldapserver.crt

[root@hammer1 ~]# chown ldap:ldap /etc/pki/tls/{ldapserver.crt,ldapserver.key}

[root@hammer1 ~]# ls -l /etc/pki/tls/ldap*

Output:

-rw-r--r--. 1 ldap ldap 1224 Apr 12 18:23 /etc/pki/tls/ldapserver.crt

-rw-------. 1 ldap ldap 1704 Apr 12 18:22 /etc/pki/tls/ldapserver.key

[root@hammer1 ~]# vi tls.ldif

dn: cn=config
changetype: modify
add: olcTLSCACertificateFile
olcTLSCACertificateFile: /etc/pki/tls/ldapserver.crt
-
add: olcTLSCertificateKeyFile
olcTLSCertificateKeyFile: /etc/pki/tls/ldapserver.key
-
add: olcTLSCertificateFile
olcTLSCertificateFile: /etc/pki/tls/ldapserver.crt

[root@hammer1 ~]# ldapadd -Y EXTERNAL -H ldapi:/// -f tls.ldif
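If slapd rejects the TLS settings, a common cause is a certificate and key that do not belong together. This sketch compares the public key embedded in the certificate with the one derived from the private key. `cert_matches_key` is a hypothetical name, and the paths default to the ones used above. The same certificate can be created without prompts by adding `-subj "/CN=ldap.prolug.lan"` (the CN is an assumption based on this lab's host name) to the `openssl req` command. The `TLS_REQCERT never` setting in /etc/openldap/ldap.conf tells clients not to check the server certificate at all. That is convenient for a self-signed lab certificate but not a setting to carry into production.

```shell
#!/usr/bin/env bash
# Succeed (exit 0) only if the certificate and private key share a public key.
# Both openssl commands print the key as a PEM SubjectPublicKeyInfo block.
cert_matches_key() {
  local crt="${1:-/etc/pki/tls/ldapserver.crt}" key="${2:-/etc/pki/tls/ldapserver.key}"
  [ "$(openssl x509 -in "$crt" -noout -pubkey)" = "$(openssl pkey -in "$key" -pubout)" ]
}

# Example: cert_matches_key && echo "certificate and key match"
#          openssl x509 -in /etc/pki/tls/ldapserver.crt -noout -subject -enddate
```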

17. Fix the /etc/openldap/ldap.conf to allow for certs

[root@hammer1 ~]# vi /etc/openldap/ldap.conf

#

# LDAP Defaults

#

# See ldap.conf(5) for details

# This file should be world readable but not world writable.

#BASE dc=example,dc=com

#URI ldap://ldap.example.com ldap://ldap-master.example.com:666

#SIZELIMIT 12

#TIMELIMIT 15

#DEREF never

# When no CA certificates are specified the Shared System Certificates

# are in use. In order to have these available along with the ones specified

# by TLS_CACERTDIR one has to include them explicitly:

TLS_CACERT /etc/pki/tls/ldapserver.crt

TLS_REQCERT never

# System-wide Crypto Policies provide up to date cipher suite which should

# be used unless one needs a finer grinded selection of ciphers. Hence, the

# PROFILE=SYSTEM value represents the default behavior which is in place

# when no explicit setting is used. (see openssl-ciphers(1) for more info)

#TLS_CIPHER_SUITE PROFILE=SYSTEM

# Turning this off breaks GSSAPI used with krb5 when rdns = false

SASL_NOCANON on

[root@hammer1 ~]# systemctl restart slapd

SSSD Configuration and Realmd join to LDAP

SSSD can connect a server to a trusted LDAP system and authenticate users for access to local resources. You will likely do this during your career and it is a valuable skill to work with.

1. Install sssd, configure, and validate that the user is seen by the system

[root@hammer1 ~]# dnf install openldap-clients sssd sssd-ldap oddjob-mkhomedir authselect

[root@hammer1 ~]# authselect select sssd with-mkhomedir --force

[root@hammer1 ~]# systemctl enable --now oddjobd.service

[root@hammer1 ~]# systemctl status oddjobd.service

2. Uncomment and fix the lines in /etc/openldap/ldap.conf

[root@hammer1 ~]# vi /etc/openldap/ldap.conf

Set these lines:

BASE dc=prolug,dc=lan

URI ldap://ldap.prolug.lan/

3. Edit the sssd.conf file

[root@hammer1 ~]# vi /etc/sssd/sssd.conf

[domain/default]

id_provider = ldap

autofs_provider = ldap

auth_provider = ldap

chpass_provider = ldap

ldap_uri = ldap://ldap.prolug.lan/

ldap_search_base = dc=prolug,dc=lan

#ldap_id_use_start_tls = True

#ldap_tls_cacertdir = /etc/openldap/certs

cache_credentials = True

#ldap_tls_reqcert = allow

[sssd]

services = nss, pam, autofs

domains = default

[nss]

homedir_substring = /home

[root@hammer1 ~]# chmod 0600 /etc/sssd/sssd.conf

[root@hammer1 ~]# systemctl start sssd

[root@hammer1 ~]# systemctl status sssd
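If sssd fails to start, two things worth checking are the file mode (sssd checks the permissions on sssd.conf, which is why the step above runs chmod 0600) and whether every name in `domains =` under `[sssd]` has a matching `[domain/NAME]` section. This sketch checks both with two hypothetical functions. It does not test connectivity to the LDAP server. `journalctl -u sssd` shows sssd's own error messages.

```shell
#!/usr/bin/env bash
# check_sssd_conf: fail unless sssd.conf has mode 600.
check_sssd_conf() {
  local conf="${1:-/etc/sssd/sssd.conf}" mode
  mode=$(stat -c '%a' "$conf") || return 2
  if [ "$mode" != "600" ]; then
    echo "$conf has mode $mode; expected 600" >&2
    return 1
  fi
  echo "$conf has mode 600"
}

# check_sssd_domains: fail if a domain listed in [sssd] "domains =" has no
# [domain/NAME] section anywhere in the file.
check_sssd_domains() {
  awk '
    /^\[sssd\]/ { in_sssd = 1; next }
    /^\[/ { in_sssd = 0 }
    /^\[domain\// { name = $0; sub(/^\[domain\//, "", name); sub(/\].*$/, "", name); have[name] = 1 }
    in_sssd && /^[ \t]*domains[ \t]*=/ { list = $0; sub(/^[^=]*=/, "", list); n = split(list, wanted, ",") }
    END {
      if (n == 0) { print "no domains= line in [sssd]"; exit 1 }
      bad = 0
      for (i = 1; i <= n; i++) {
        d = wanted[i]; gsub(/[ \t]/, "", d)
        if (!(d in have)) { print "missing section [domain/" d "]"; bad = 1 }
      }
      exit bad
    }' "${1:-/etc/sssd/sssd.conf}"
}

# Example: check_sssd_conf && check_sssd_domains
```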

4. Validate that the user can be seen

[root@hammer1 ~]# id testuser

Output:

uid=15000(testuser) gid=15000 groups=15000

Congratulations! Look at you, doing all the Linux.

Please reboot the lab machine when done.

[root@hammer1 ~]# reboot

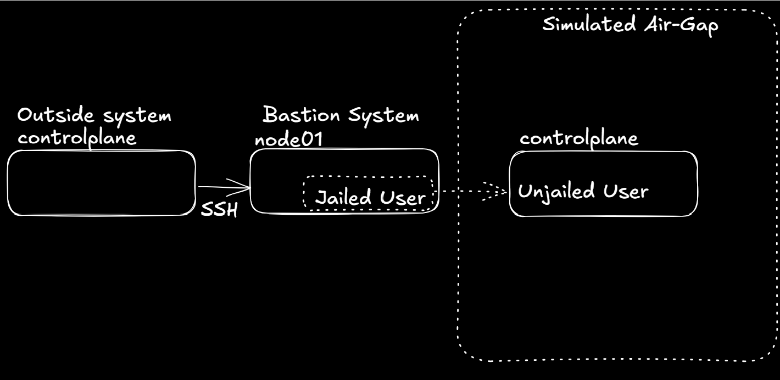

Overview

Bastions and air-gaps are strategies for controlling how systems connect, or don't connect, to the outside world. They focus on limiting exposure and creating strong boundaries that support a broader security design. In this unit, we look at how to separate systems and create safe disconnects should a problem arise.

Learning Objectives

- Understand the role and importance of air-gapped systems.

- Recognize how to balance strong security with operational efficiency.

- Learn how bastion hosts can help control and limit system access.

- Understand methods for automating the jailing and restriction of users.

- Gain a foundational understanding of chroot environments and diversion techniques.

Relevance and Context

As organizations grow, protecting critical systems becomes more challenging. Air-gapped systems and bastion hosts offer proven ways to limit exposure and manage access securely. Understanding these concepts is essential for building strong security foundations without creating unnecessary barriers to operations.

Prerequisites

To be successful, students should have a working understanding of skills and tools including:

- Basic directory navigation skills.

- Ability to edit and manage configuration files.

- Understanding of systemd services and the use of the sysctl command.

- Basic knowledge of Bash scripting.

Key Terms and Definitions

Air-gapped

Bastion

Jailed process

Isolation

Ingress

Egress

Exfiltration

Cgroups

Namespaces

- Mount

- PID

- IPC

- UTS

Instructions

Fill out this sheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

- https://www.sans.org/information-security-policy/

- https://www.sans.org/blog/the-ultimate-list-of-sans-cheat-sheets/

- https://aws.amazon.com/search/?searchQuery=air+gapped#facet_type=blogs&page=1

- https://aws.amazon.com/blogs/security/tag/bastion-host/

Downloads

The worksheet has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

Unit 4 Recording

Discussion Post #1

Review some of the blogs here:

Or find some on your own about air-gapped systems.

- What seems to be the theme of air-gapped systems?

- What seems to be their purpose?

- If you use google, or an AI, what are some of the common themes that come up when asked about air-gapped or bastion systems?

Discussion Post #2

Do a Google or AI search of topics around jailing a user or processes in Linux.

- Can you enumerate the methods of jailing users?

- Can you think of when you've been jailed as a Linux user?

If not, can you think of useful ways to use a jail?

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Definitions

Air-gapped

Bastion

Jailed process

Isolation

Ingress

Egress

Exfiltration

Cgroups

Namespaces

- Mount

- PID

- IPC

- UTS

Digging Deeper

- While this isn't, strictly speaking, an automation course, there is some value in looking at automating bastion deployments. Check out this Ansible code: https://github.com/het-tanis/stream_setup/blob/master/roles/bastion_deploy/tasks/main.yml

  - Does the setup make sense to you with our deployment?

  - What can improve and make this better?

- Find a blog or GitHub repository where someone else deploys a bastion. Compare it to our process.

- Knowing what you now know about bastions, jails, and air-gapped systems, reflect on the first 3 weeks and all the STIGs you've reviewed and touched. Do any of them seem moot, or less necessary, if applied in an air-gapped environment?

  - Does your answer change if you read about Zero Trust and know how much of a hot topic that is in the security world now?

  - Why or why not?

- Think of a Linux system where you would like to deploy a bastion (if you cannot think of one, use the ProLUG Lab). Draw out how you think the system works in excalidraw.com.

Reflection Questions

- Does it matter if the user knows that they are jailed? Why or why not?

- What questions do you still have about this week?

- How are you going to use what you've learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment, we ask that you reboot the machine from the command line so that other students will have the intended environment.

Required Materials

PuTTY or other connection tool

Lab Server

Root or sudo command access

STIG Viewer 2.18 (download from https://public.cyber.mil/stigs/downloads/ )

Downloads

The lab has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

PreLAB

Review lab diagram for the Bastion design.

LAB

This lab is designed to have the engineer practice securing a Linux environment by the use of bastion hosts and jailing users as they enter an air-gapped environment.

Jailing a User

- Follow the lab here, answering the questions below as you progress: https://killercoda.com/het-tanis/course/Linux-Labs/204-building-a-chroot-jail

- If you were to write out the high level steps of building a chroot jail, what would they be?

- Think about what you did in the lab and what extra (or less) you might give a user/process.

  - What directories are needed?

  - What executables might you give the jailed user/process?

  - If you give an executable, why is it important to give the link libraries that it uses?

  - What are the special files that you made with mknod and why must they be there? (Try removing them or redoing the lab without them. How does it break?)
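To see why the link libraries matter, consider what a dynamically linked executable needs to run. Copying the binary alone is not enough: once chrooted, the dynamic loader looks for its libraries at the same absolute paths inside the jail. The sketch below automates the copy step with a hypothetical `jail_add_binary` function. It copies each executable and every library that `ldd` reports, keeping the same paths. It does not create the device nodes made with mknod, and it is not necessarily how the Killercoda lab builds its jail.

```shell
#!/usr/bin/env bash
# Copy executables plus every shared library ldd reports for them into a jail
# directory, keeping the same absolute paths so the dynamic loader finds them
# after chroot. Device nodes (the mknod step) are not created here.
jail_add_binary() {
  local jail="$1" name path lib
  shift
  for name in "$@"; do
    path=$(command -v "$name") || { echo "not found: $name" >&2; return 1; }
    case "$path" in
      /*) ;;                                    # a real file on disk
      *) echo "not an executable file: $name" >&2; return 1 ;;
    esac
    mkdir -p "$jail$(dirname "$path")"
    cp -L "$path" "$jail$path"
    # ldd lines look like "libc.so.6 => /lib64/libc.so.6 (0x...)" or
    # "/lib64/ld-linux-x86-64.so.2 (0x...)"; keep only the absolute paths.
    ldd "$path" 2>/dev/null | grep -o '/[^ ]*' | while read -r lib; do
      mkdir -p "$jail$(dirname "$lib")"
      cp -L "$lib" "$jail$lib"
    done
  done
}

# Example (as root): jail_add_binary /var/jail bash ls cat
#                    chroot /var/jail "$(command -v bash)"
```

Removing one of the copied libraries and retrying the chroot is a quick way to see the loader error a jailed process hits when a dependency is missing.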

Building a Bastion

- Follow the lab here: https://killercoda.com/het-tanis/course/Linux-Labs/210-building-a-bastion-host

- If you were to write out the high level steps of building a bastion host, what would they be?

- When you jump into the bastion host, do you have any options other than the one you have given yourself?

- How did you test that you couldn't leave the jailed environment?

  - How effective do you think a 20-second timeout is as a technical preventive control against users breaking out of the jail?
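From the user's side, reaching an internal host through a bastion is often configured with OpenSSH's `ProxyJump` option (or `ssh -J` for a one-off connection). The sketch below shows only that client-side hop. It does not reproduce the jail or timeout that the lab builds on the bastion itself. The `jump_stanza` function, host names, and user are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Print an ~/.ssh/config stanza that reaches an internal host only by hopping
# through a bastion with OpenSSH's ProxyJump option.
# All host names and the user below are hypothetical placeholders.
jump_stanza() {
  local name="$1" target="$2" bastion="$3" user="${4:-$(id -un)}"
  cat <<EOF
Host ${name}
    HostName ${target}
    User ${user}
    ProxyJump ${user}@${bastion}
EOF
}

# Example: jump_stanza internal hammer2 bastion.prolug.lan student >> ~/.ssh/config
#          ssh internal
# One-off equivalent: ssh -J student@bastion.prolug.lan student@hammer2
```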

Digging Deeper challenge (not required for finishing lab)

- Fix the drawing from the lab with excalidraw and properly replace it here: https://github.com/het-tanis/prolug-labs/tree/main/Linux-Labs/210-building-a-bastion-host

- Do a pull request and get some GitHub street cred or something.

Be sure to reboot the lab machine from the command line when you are done.

Overview

Where software originates—and how and when it is updated (patched)—is essential to maintaining system stability and security. Every patch applied to a system must come from a known and trusted source, as introducing changes into a stable environment can have significant consequences. Administrators and engineers ensure that patching is planned and scheduled using verified, trackable repositories and resources.

In this unit, we will examine how this process is implemented in adjacent distributions, where administrators can apply granular control to Red Hat Package Manager (RPM) packages and maintain internal repositories of vetted packages.

Learning Objectives

- Understand the importance of package integrity.

- Understand patching techniques and routines.

- Understand automated methods of patching.

- Understand custom internal package repositories.

Relevance and Context

For security engineers, controlling the origin and integrity of software updates is a foundational practice for minimizing attack surfaces. By managing internal repositories and applying strict control over RPM packages, organizations can enforce compliance, prevent supply chain attacks, and ensure only trusted, audited software enters production environments.

Prerequisites

To be successful, students should have a working understanding of skills and tools including:

- Basic directory navigation skills.

- Ability to edit and manage configuration files.

- Basic knowledge of STIGs.

- Basic knowledge of Ansible.

Key Terms and Definitions

Patching

Repos

Software

- EPEL

- BaseOS v. Appstream (in RHEL/Rocky)

- Other types you can find?

httpd

patching

GPG Key

DNF/YUM

Instructions

Fill out this sheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

- https://public.cyber.mil/stigs/downloads/

- https://httpd.apache.org/

- https://docs.rockylinux.org/books/admin_guide/13-softwares/

- https://sig-core.rocky.page/documentation/patching/patching/

- https://wiki.rockylinux.org/rocky/repo/

- https://www.sans.org/information-security-policy/

- https://www.redhat.com/en/blog/whats-epel-and-how-do-i-use-it/

Downloads

The worksheet has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

Unit 5 Recording

Discussion Post #1

Review the Rocky documentation on software management in Linux.

- What do you already understand about the process?

- What new things did you learn or pick up?

- What are the DNF plugins? What is the use of the versionlock plugin?

- What is EPEL? What do you need to consider when using it?

Discussion Post #2

Do a google search for "patching enterprise Linux" and try to wade through all of the noise.

- What blogs (or AI) do you find that enumerates a list of steps or checklists to consider?

- After looking at that, how does patching a fleet of systems in the enterprise differ from pushing "update now" on your local desktop? What seems to be the major considerations? What seems to be the major roadblocks?

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Definitions

Patching

Repos

Software

- EPEL

- BaseOS v. Appstream (in RHEL/Rocky)

- Other types you can find?

httpd

patching

GPG Key

DNF/YUM

Digging Deeper

- After completing the lab and worksheet, draw out how you would deploy a software repository into your system. How are you going to update it? What tools do you find that are useful in this space?

Reflection Questions

- Why is it that repos are controlled by root/admin functions and not any user, developer, or manager?

- What questions do you still have about this week?

- How are you going to use what you've learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment, we ask that you reboot the machine from the command line so that other students will have the intended environment.

Required Materials

PuTTY or other connection tool

Lab Server

Root or sudo command access

STIG Viewer 2.18 (download from https://public.cyber.mil/stigs/downloads/ )

Downloads

The lab has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

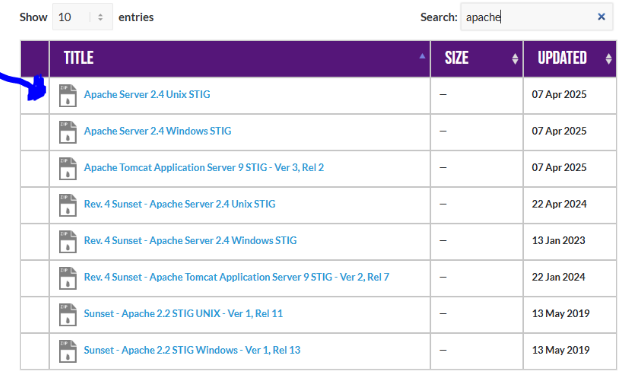

PreLAB

Download the STIG Viewer 2.18 from - https://public.cyber.mil/stigs/downloads/

Download the STIG for Apache 2.4 and then import it into your STIG Viewer

Create a checklist from the opened STIG for Apache 2.4

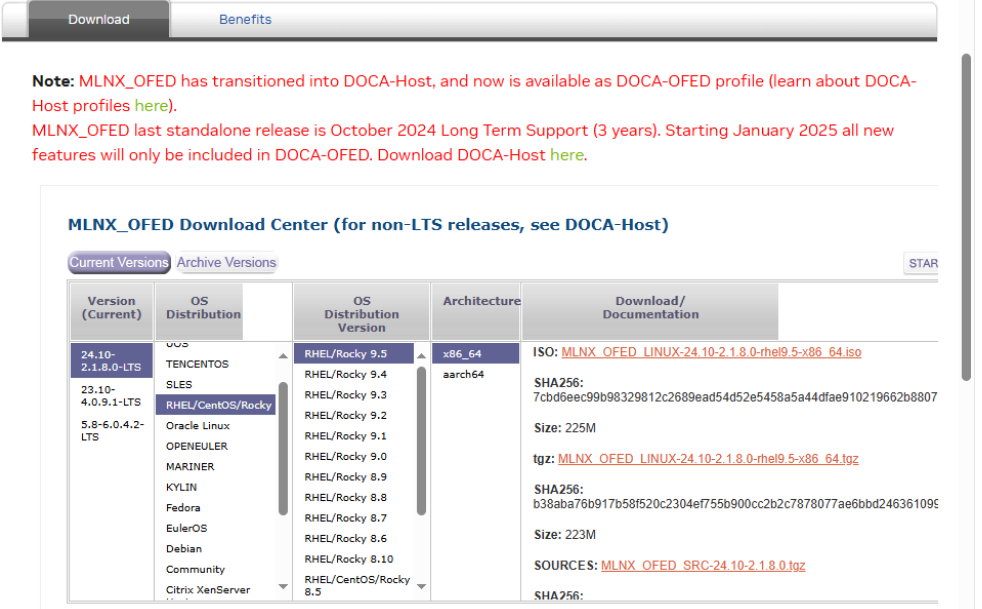

Review the software download process for Mellanox drivers:

Linux InfiniBand Drivers

Look through the available downloads and see if you can find the currently available LTS for Rocky 9.5 x86_64.

After that find a distribution of your choice and play with their tool.

LAB

This lab is designed to have the engineer practice deploying patches in a Linux environment. The engineer will create repos and then deploy patches through an automated enterprise level Ansible playbook. But first, the engineer will review some of the Apache 2.4 STIG requirements if they want to run their own repo on their network.

Apache STIGs Review

- Look at the 4 STIGs for "tls"

  - What file is fixed for all of them to be remediated?

- Install httpd on your Hammer server

systemctl stop wwclient

dnf install -y httpd

systemctl start httpd

- Check STIG V-214234

  - What is the problem?

  - What is the fix?

  - What type of control is being implemented?

  - Is it set properly on your system?

- Check STIG V-214248

  - What is the problem?

  - What is the fix?

  - What type of control is being implemented?

  - Is it set properly on your system?

  - How do you think SELinux will help implement this control in an enforcing state? Or will it not affect it?

Building repos

- Start out by removing all your active repos

cd /etc/yum.repos.d

mkdir old_archive

mv *.repo old_archive

dnf repolist

- Mount the local repository and make a local repo

mount -o loop /lab_work/repos_and_patching/Rocky-9.5-x86_64-dvd.iso /mnt

df -h    # Should see the mount point

ls -l /mnt

touch /etc/yum.repos.d/rocky9.repo

vi /etc/yum.repos.d/rocky9.repo

Add the repo configuration:

[BaseOS]
name=BaseOS Packages Rocky Linux 9
metadata_expire=-1
gpgcheck=1
enabled=1
baseurl=file:///mnt/BaseOS/
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[AppStream]
name=AppStream Packages Rocky Linux 9
metadata_expire=-1
gpgcheck=1
enabled=1
baseurl=file:///mnt/AppStream/
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

Save with esc :wq or "shift + ZZ".

- Do the paths you're using here make sense to you based off what you saw when using the ls -l? Why or why not?

chmod 644 /etc/yum.repos.d/rocky9.repo

dnf clean all

- Test the local repository.

dnf repolist

dnf --disablerepo="*" --enablerepo="AppStream" list available

  - Approximately how many are available?

dnf --disablerepo="*" --enablerepo="AppStream" list available | nl

dnf --disablerepo="*" --enablerepo="AppStream" list available | nl | head

Now use BaseOS.

dnf --disablerepo="*" --enablerepo="BaseOS" list available

  - Approximately how many are available?

dnf --disablerepo="*" --enablerepo="BaseOS" list available | nl

dnf --disablerepo="*" --enablerepo="BaseOS" list available | nl | head

- Try to install something

dnf --disablerepo="*" --enablerepo="BaseOS,AppStream" install gimp    # hit "n"

  - How many packages does it want to install?

  - How can you tell they're from different repos?

Share out the local repository for your internal systems (tested on just this one system)

rpm -qa | grep -i httpd systemctl status httpd ss -ntulp | grep 80 lsof -i :80 cd /etc/httpd/conf.d vi repos.confEdit the file:

<Directory "/mnt"> Options Indexes FollowSymLinks AllowOverride None Require all granted </Directory> Alias /repo /mnt <Location /repo> Options Indexes FollowSymLinks AllowOverride None Require all granted </Location>Restart the service.

```shell
systemctl restart httpd
vi /etc/yum.repos.d/rocky9.repo
```

Edit the file with your lab's name in the `baseurl`:

```ini
###USE YOUR HAMMER MACHINE IN BASEURL###
[BaseOS]
name=BaseOS Packages Rocky Linux 9
metadata_expire=-1
gpgcheck=1
enabled=1
#baseurl=file:///mnt/BaseOS/
baseurl=http://hammer25/repo/BaseOS/
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[AppStream]
name=AppStream Packages Rocky Linux 9
metadata_expire=-1
gpgcheck=1
enabled=1
#baseurl=file:///mnt/AppStream/
baseurl=http://hammer25/repo/AppStream/
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
```

- Do the paths you've modified at `baseurl` make sense to you? If not, what do you need to better understand?

```shell
dnf clean all
dnf repolist
```

Try to install something:

```shell
dnf --disablerepo="*" --enablerepo="BaseOS AppStream" install gimp
```
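Before pointing other systems at the shared repository, it can help to confirm that its metadata is actually being served. Every yum/dnf repository keeps its index at `repodata/repomd.xml` underneath its `baseurl`, so a minimal check is to fetch that one file. The sketch below assumes `curl` is installed; `check_repo` is a hypothetical helper name, not part of `dnf`.

```shell
#!/usr/bin/env bash
# check_repo (hypothetical helper): succeed if a dnf/yum repository's metadata
# index, repodata/repomd.xml, can be fetched from the given baseurl.
check_repo() {
  local baseurl=${1%/}                        # tolerate a trailing slash
  local index="$baseurl/repodata/repomd.xml"
  # -f: treat HTTP errors (404 etc.) as failures; -sS: quiet, but show errors.
  if curl -fsS -o /dev/null "$index"; then
    echo "OK $index"
  else
    echo "FAIL $index" >&2
    return 1
  fi
}

# Example, from any system that should use the share (adjust the hostname):
#   check_repo http://hammer25/repo/BaseOS/
#   check_repo http://hammer25/repo/AppStream/
```

Because `curl` also understands `file://` URLs, the same check works against the commented-out `baseurl=file:///mnt/BaseOS/` form before the HTTP share is set up.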

Enterprise patching

- Complete the killercoda lab found here: https://killercoda.com/het-tanis/course/Ansible-Labs/102-Enterprise-Ansible-Patching

- Look at the roles, in the order they are run in the playbook.

- Does it make sense how the custom facts are used? What other custom facts might you use?

- What are the prechecks doing? What other ones might you add?

- What does the reboot task do, and how does it check whether a reboot is needed?

Digging Deeper challenge (not required for finishing lab)

- You've set up a local repository and shared it out to other systems that might want to connect. Why might you need this if you're going to fully air-gap systems? Is it still necessary even if your enterprise patching solution is well designed? Why or why not?

- Can you add the Mellanox ISO that is included in the `/lab_work/repos_and_patching` section as a repository that your systems can access? If you have trouble, troubleshoot and ask the group for help.

- Make a pull request to improve the enterprise patching tool that you just used. Write up, for the group, why you need that change and how it improves the efficacy of the patching.

Be sure to `reboot` the lab machine from the command line when you are done.

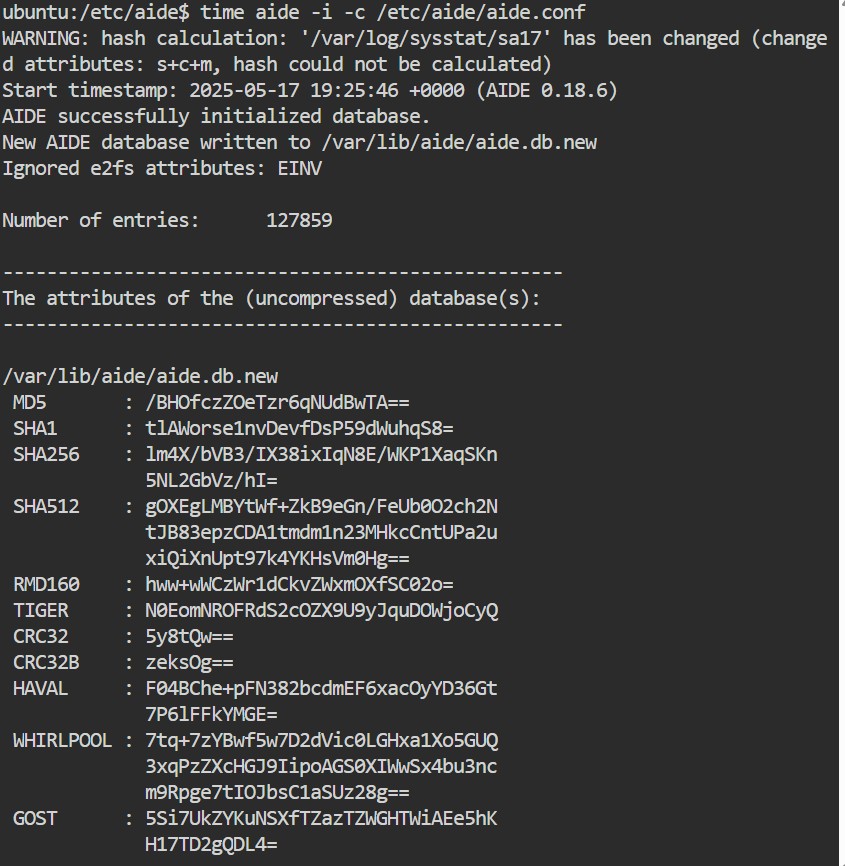

Overview

Monitoring and parsing logs is one of the most essential security engineering practices in any production environment.

This unit explores how logs are generated, formatted, collected, and analyzed across various layers of the infrastructure stack, from applications to operating systems to networks.

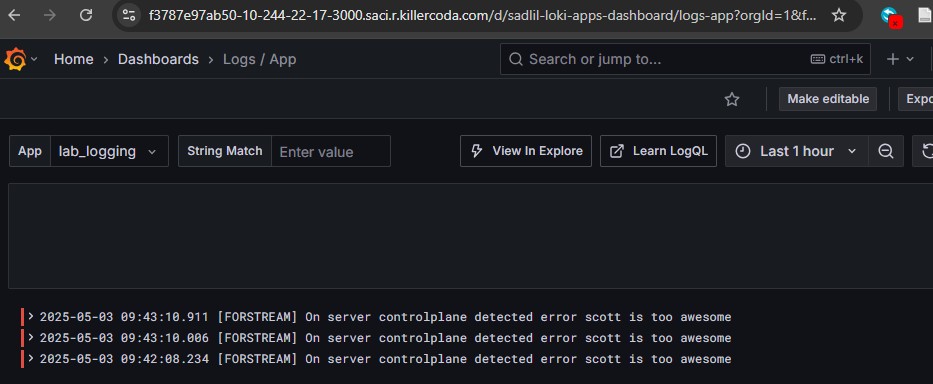

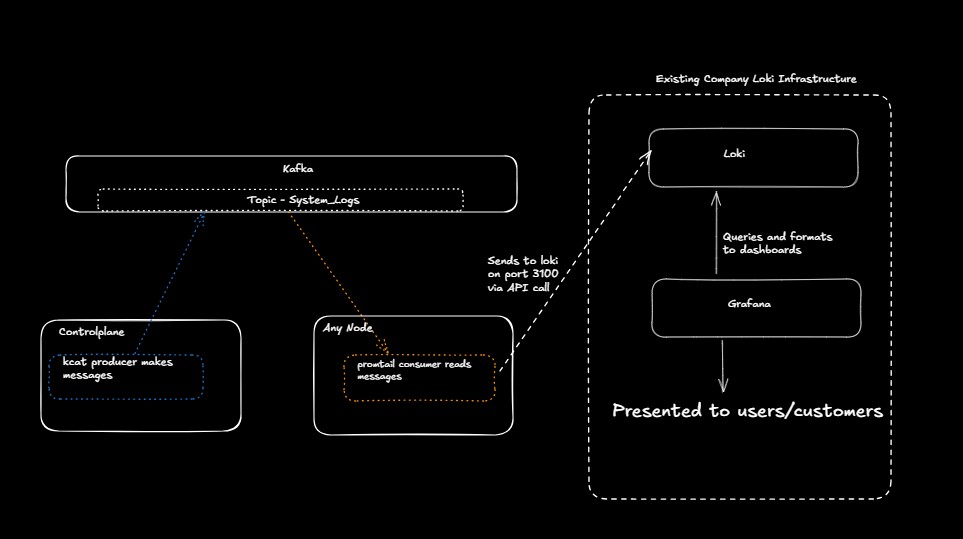

Students will gain an operational understanding of how to identify log sources, use modern tools for log aggregation and search (such as Loki), and develop awareness of log structure, integrity, and retention requirements.

Learning Objectives

By the end of Unit 6, students will:

- Understand the different types of logs and their role in system and security monitoring.

- Identify log structures (e.g., RFC 3164, RFC 5424, journald) and apply appropriate parsing techniques.

- Explore and configure log aggregation pipelines using modern tools like Grafana Loki.

- Analyze real-world security events using log data and query languages.

- Learn how log immutability and integrity contribute to reliable forensics and compliance.
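To make the difference between the two syslog formats concrete: both begin with a PRI field `<N>`, where N = facility * 8 + severity, but an RFC 5424 message follows it with a version number (`1`) and an ISO 8601 timestamp, while an RFC 3164 message goes straight to a `Mmm dd hh:mm:ss` timestamp. The bash sketch below classifies a raw line and decodes its PRI; the function names are hypothetical, and the sample lines are adapted from the examples in the two RFCs.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for telling RFC 3164 and RFC 5424 syslog lines apart.
# Both formats start with <PRI>, where PRI = facility * 8 + severity.

SYSLOG_RE='^<([0-9]{1,3})>(.*)$'

# syslog_format: print rfc5424, rfc3164, or unknown for one raw line.
syslog_format() {
  if [[ ! $1 =~ $SYSLOG_RE ]]; then
    echo unknown
    return 1
  fi
  # RFC 5424 puts a version number ("1") and a space right after <PRI>;
  # RFC 3164 goes straight to a "Mmm dd hh:mm:ss" timestamp.
  if [[ ${BASH_REMATCH[2]} == "1 "* ]]; then
    echo rfc5424
  else
    echo rfc3164
  fi
}

# syslog_pri: split the PRI value into its facility and severity numbers.
syslog_pri() {
  [[ $1 =~ $SYSLOG_RE ]] || return 1
  local pri=$((10#${BASH_REMATCH[1]}))   # force base 10, even with leading zeros
  echo "facility=$((pri / 8)) severity=$((pri % 8))"
}

# Sample lines adapted from the examples in RFC 3164 and RFC 5424.
bsd="<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8"
ietf="<34>1 2003-10-11T22:14:15.003Z mymachine.example.com su - ID47 - 'su root' failed for lonvick on /dev/pts/8"

syslog_format "$bsd"    # prints rfc3164
syslog_format "$ietf"   # prints rfc5424
syslog_pri "$bsd"       # prints facility=4 severity=2 (auth, crit)
```

In a real pipeline the syslog daemon or log shipper does this parsing; the sketch only shows which fields each format carries.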

Relevance & Context

Logs are often the first and best source of truth when diagnosing an incident, auditing a system, or responding to a breach.

Without well-structured, searchable, and preserved logs, response teams are blind to what actually happened.

This unit trains students to think like operators and defenders, ensuring logs are complete, available, immutable, and actionable.

It builds directly on previous units around compliance and auditing, preparing learners to create scalable observability strategies that support both security and performance goals.

Prerequisites

Before beginning Unit 6, students should:

- Be comfortable working at the command line using `journalctl`, `grep`, `less`, and related tools.

- Understand system service management with `systemctl`.

- Have basic familiarity with syslog, log rotation, and the concept of standard input/output streams.

- Be able to interact with YAML and JSON-formatted configuration files.

- Have installed or downloaded STIG Viewer 2.18 for compliance reference.

Key terms and Definitions

Types of Logs

- Application Logs

- Host Logs

- Network Logs

- Database Logs

Log Structure

- RFC 3164 BSD Syslog

- RFC 5424 IETF Syslog

- Systemd Journal

Log Rotation

Log Aggregation

- ELK Stack

- Splunk

- Loki

- Graylog

SIEM (Security Information and Event Management)

Instructions

Fill out this sheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

- https://grafana.com/docs/loki/latest/query/analyzer/

- https://www.sans.org/information-security-policy/

- https://www.sans.org/blog/the-ultimate-list-of-sans-cheat-sheets/

- https://public.cyber.mil/stigs/downloads/

Downloads

The worksheet has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

Unit 6 Recording

Discussion Post #1

Review chapter 15 of Google's Building Secure and Reliable Systems book: https://google.github.io/building-secure-and-reliable-systems/raw/ch15.html#collect_appropriate_and_useful_logs. There are 14 references at the end of the chapter; follow them for more information. One of them, https://jvns.ca/blog/2019/06/23/a-few-debugging-resources/, should be reviewed for question "c".

- a. What are some concepts that are new to you?

- b. There are 5 conclusions drawn, do you agree with them? Would you add or remove anything from the list?

- c. In Julia Evans' debugging blog post, which shows that debugging is just another form of troubleshooting, what useful things do you learn about the relationship between these topics? Are there any techniques you already use that this helps solidify for you?

Discussion Post #2

Read https://sre.google/sre-book/monitoring-distributed-systems/.

- What interesting or new things do you learn in this reading? What might you want to know more about?

- What are the "4 golden signals"?

- After reading these, why is immutability so important to logging? What do you think the other required items are for logging to be effective?

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Definitions

Types of logs

- Application

- Host

- Network

- DB

Immutable

Structure of Logs

- RFC 3164 BSD Syslog

- RFC 5424 IETF Syslog

- Systemd Journal

Log rotation

Rsyslog

Log aggregation

- ELK

- Splunk

- Graylog

- Loki

SIEM

Digging Deeper

- Find a cloud service and see what their logging best practices are for security incident response. Here is AWS: https://aws.amazon.com/blogs/security/logging-strategies-for-security-incident-response/

- What are the high level concepts mentioned?

- What are the tools available and what actions do they take?

- What manual and automated query capabilities are provided, and how do they help you rapidly reach a correct assessment of the logged events?

- Open up that STIG Viewer and filter by "logging" for any of the previous STIGs we've worked on. (MariaDB has some really good ones.)

- What seems to be a common theme?

- What types of activities MUST be logged in various applications and operating systems?

- Does it make sense why all logins are tracked?

- Does it make sense why all admin actions, even just attempted admin actions, are logged?

Reflection Questions

- What architectures have you used in your career?

- If you haven't yet worked with any of these, what do you think you would architect in the ProLUG lab (~60 virtual machines, 4 physical machines, 1 NFS share, and 2 Windows laptops)?

- What questions do you still have about this week?

- How are you going to use what you've learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment, we ask that you `reboot` the machine from the command line so that other students will have the intended environment.

Required Materials

- PuTTY or other connection tool

- Lab Server

- Root or sudo command access

- STIG Viewer 2.18 (download from https://public.cyber.mil/stigs/downloads/)

Downloads

The lab has been provided below. The document(s) can be transposed to the desired format so long as the content is preserved. For example, the .txt could be transposed to a .md file.

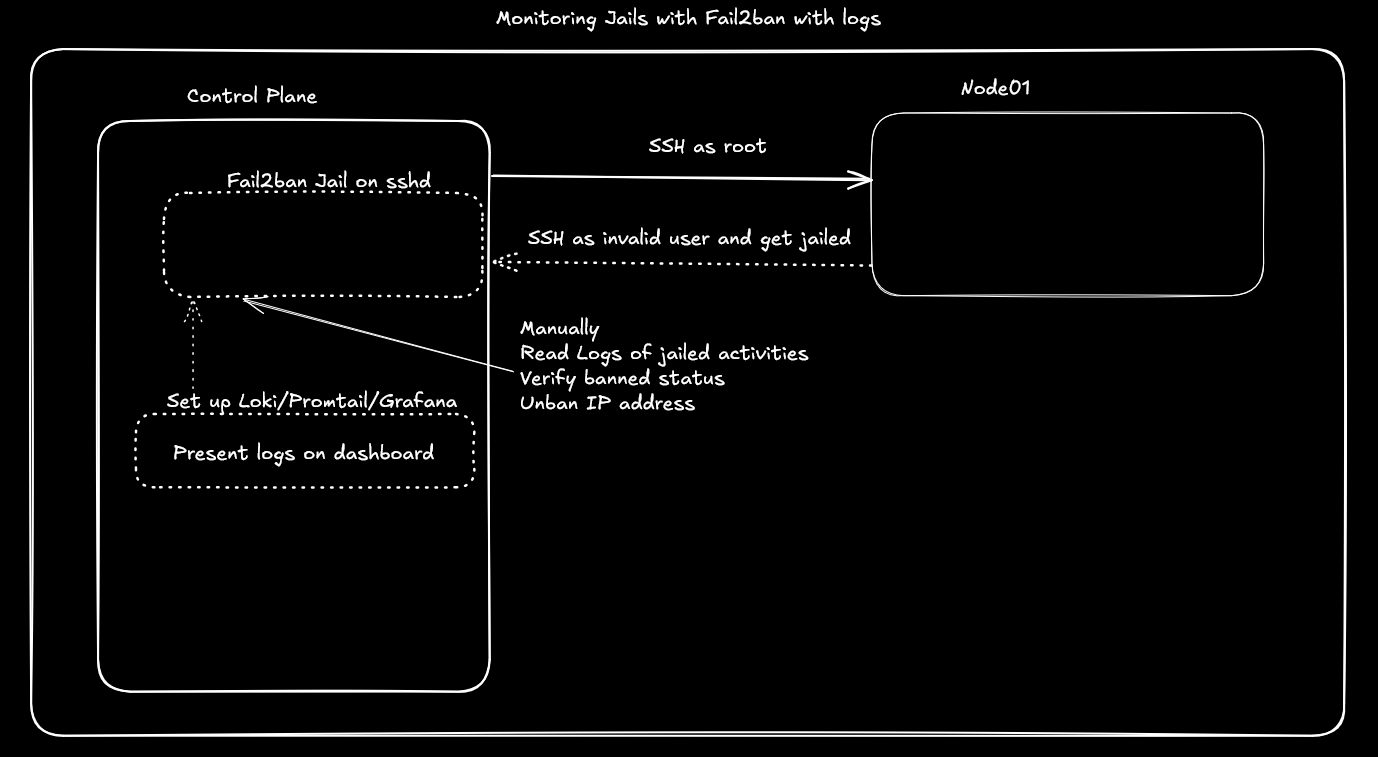

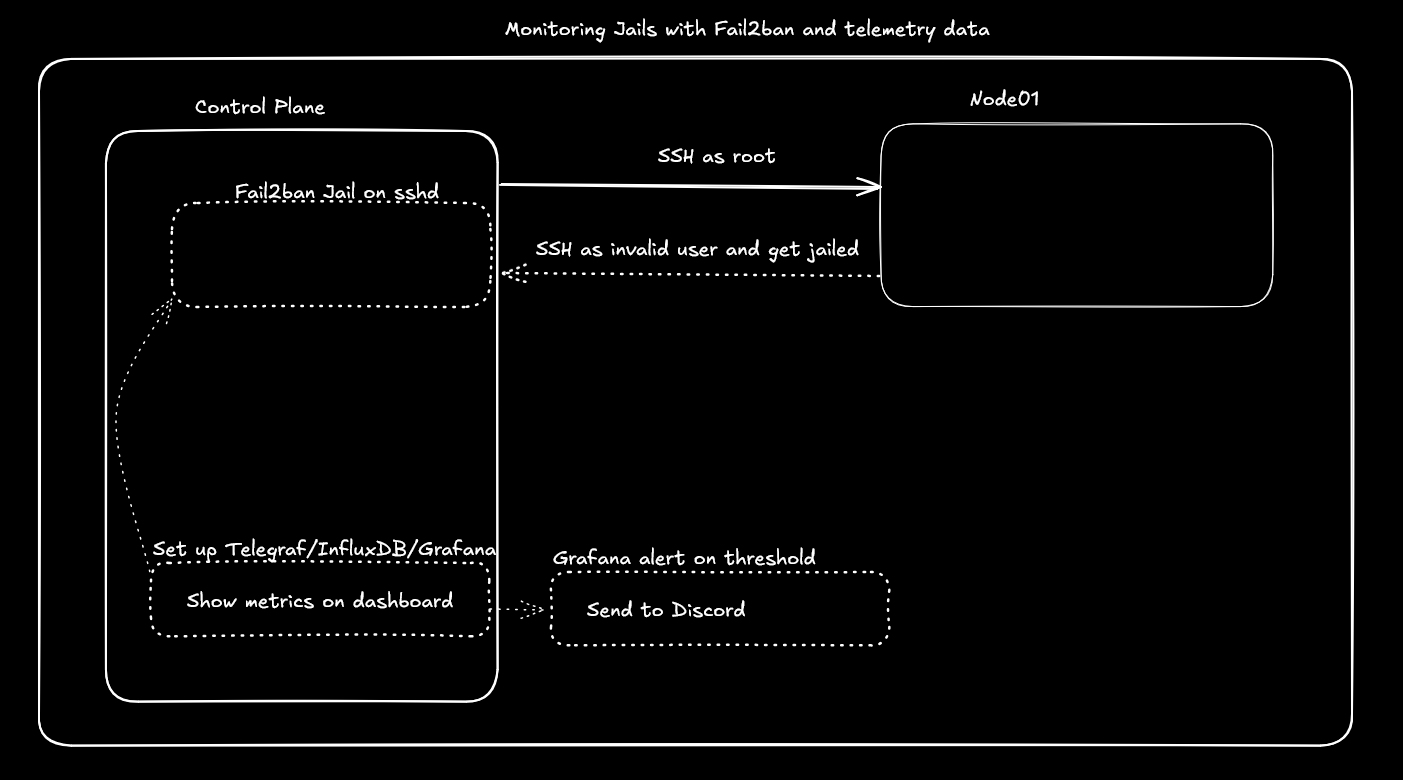

Lab 🧪

In keeping with this week's lab, there are 4 major architectures for collecting and storing logs. Within these architectures exist many variations on the archetype, each solving different problems of scale, reliability, real-time analysis, budget, expertise, compliance, and existing infrastructure in the systems being logged.

This lab will touch 3 of the 4 architecture types so that the learner understands their deployment and capabilities. The 4th type, cloud, is optional and can be completed on the learner's cloud platform of choice. The learner can then reflect on the tradeoffs that make one of these tools the right choice for their organization, or not.
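For the first of these architectures, rsyslog forwarding and collection, the moving parts are small: one collector listens for remote messages and writes them out through a template, and every other system forwards to it. The bash sketch below prints two rsyslog drop-in files in RainerScript syntax. The file names, the `loghost` collector name, TCP port 514, the `/var/log/remote` layout, and the `PerHostFile` template are illustrative assumptions, not the configuration used in the killercoda lab.

```shell
#!/usr/bin/env bash
# Hypothetical generator for two rsyslog drop-in files (RainerScript syntax).
# Review the output, then save it under /etc/rsyslog.d/ and restart rsyslog.

# collector_conf: config for the one system that "catches" everyone's logs.
collector_conf() {
  cat <<'EOF'
# Accept forwarded messages on TCP port 514.
module(load="imtcp")
input(type="imtcp" port="514")

# Dynamic file name: one directory per sending host, one file per program.
template(name="PerHostFile" type="string"
         string="/var/log/remote/%HOSTNAME%/%PROGRAMNAME%.log")

# Messages that did not originate on this host go to the per-host files
# and are not processed by the rest of the local rules.
if $fromhost-ip != "127.0.0.1" then {
    action(type="omfile" dynaFile="PerHostFile")
    stop
}
EOF
}

# client_conf HOST: config for each system that forwards its logs to HOST.
client_conf() {
  local target=$1
  if [ -z "$target" ]; then
    echo "usage: client_conf COLLECTOR_HOST" >&2
    return 1
  fi
  cat <<EOF
# Forward every facility and severity to the collector over TCP.
*.* action(type="omfwd" target="$target" port="514" protocol="tcp")
EOF
}

# Example usage (as root):
#   collector_conf > /etc/rsyslog.d/10-collector.conf       # on the collector
#   client_conf loghost > /etc/rsyslog.d/10-forward.conf    # on each sender
#   systemctl restart rsyslog
```

Splitting by `%HOSTNAME%` and `%PROGRAMNAME%` is only one choice; the template is where that decision lives. On the collector you will likely also need to allow 514/tcp through the host firewall.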

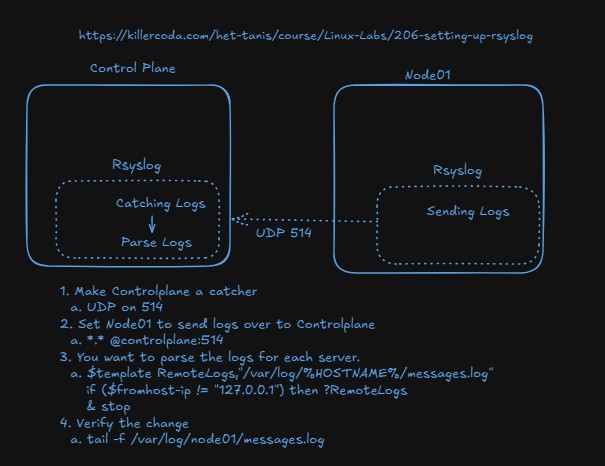

Rsyslog forwarding and collection

- Consider this architecture, where most modern Linux systems have built-in rsyslog capabilities. One of them can be set to "catch" (aggregate) all logs, and any number of servers can then send their logs to it.

- Complete the lab: https://killercoda.com/het-tanis/course/Linux-Labs/206-setting-up-rsyslog

- Why do we split out the logs in this lab? Why don't we just aggregate them in one place?

- What do we split them out by?

- How does that template configuration work?

-