Welcome to the ProLUG Enterprise Linux Systems Administration Course Book.

This Book

Contains all materials pertaining to the course including links to external resources. It has been put together with care by a number of ProLUG group members referencing original instructional materials produce by Scott Champine (het_tanis).

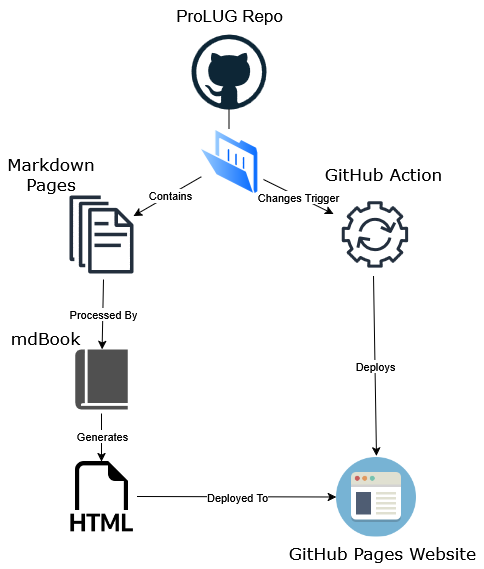

The content is version controlled with Git and stored here: https://github.com/ProfessionalLinuxUsersGroup/lac/

Furthermore, the book has been built with mdbook for ease of navigation. Be sure to try the search functionality.

Course Description:

This course addresses how the Linux systems work for administration level tasks inside a corporate environment. This course will explore everything from the administration of a Linux server and fundamental command line tasks to advanced topics such as patching and web administration.

Prerequisite(s) and/or Co-requisite(s):

Prerequisites: None

Credit hours: N/A

Contact hours: 120 (50 Theory Hours, 70 Lab Hours)

Course Summary

Major Instructional Areas

- Server build and Hardware components

- Command Line tools and Syntax

- Basic Scripting

- Linux networking

- Linux security practices

- Automation and repeating tasks

- Implement Networking in Linux

- Troubleshooting

- Benchmarking and Baselining

Course Objectives

- Explain the server build process and hardware system components.

- Analyze system security and implement basic hardening of system.

- Construct command line syntax to explore the system and gather resource information.

- Construct scripting structures of assigning variables, conditional tests, and recording output to generate scripts that do basic system tasks.

- Analyze and troubleshoot the Apache Web Server

- Analyze and troubleshoot the NFS/Samba File Shares.

- Analyze Docker and Kubernetes components and workflows.

- Describe and troubleshoot network services.

- Write and perform Ansible tasks to automate deployments to servers.

Learning Materials and References

Required Resources

Cloud Lab server running Ubuntu on Killercoda.

- Minimal resources can accomplish our tasks

- 1 CPU

- 2 GB Ram

- 30 GB Hard Drive

- Network Interface (IP already setup)

Local VM server running: RHEL, Fedora, Rocky

- Minimal resources

- 1 CPU

- 2GB RAM

- 3 3-5GB Hard Drives

- Network Interface (Bridged)

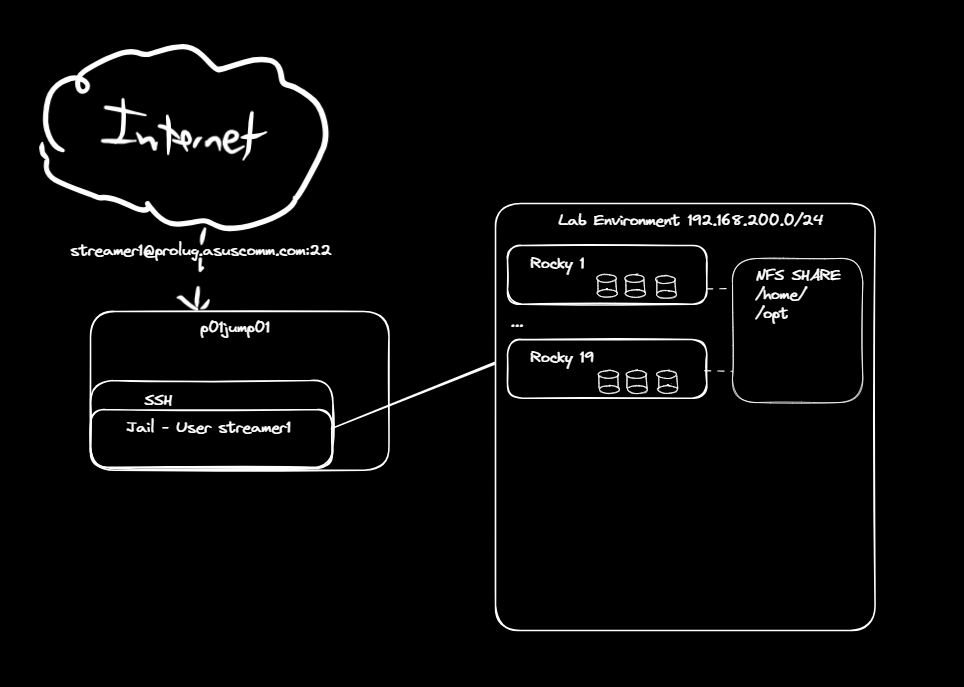

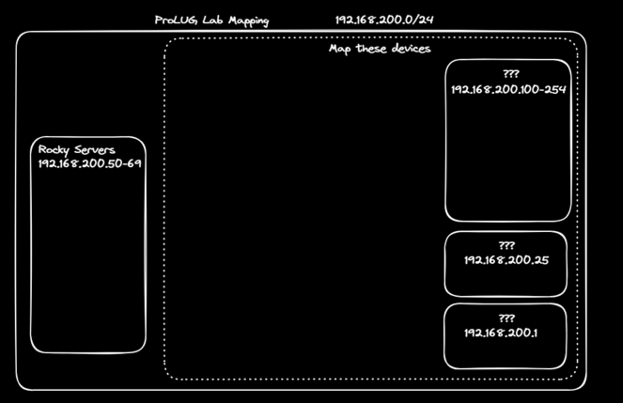

ProLUG Lab access to Rocky 9.4+ instance.

- Minimal resources can accomplish our tasks

- 1 CPU

- 4 GB RAM

- 15 GB Hard Drive

- 3 x 3GB hard drives (for raid and disk labs)

- Network Interface (IP already setup)

Course Plan

Instructional Methods

This course is designed to promote learner-centered activities and support the development of fundamental Linux skills. The course utilizes individual and group learning activities, performance-driven assignments, problem-based cases, projects, and discussions. These methods focus on building engaging learning experiences conducive to development of critical knowledge and skills that can be effectively applied in professional contexts.

Class size

This class will effectively engage 40-60 learners.

Class Schedule

Class will meet over weekend (Brown bag) sessions. 1 time per week, for 16 weeks. There will be a total of 16 sessions.

| Session | Topic |

|---|---|

| 1 | Get Linux Lab Access - CLI Primer - vi/vim/nano basics |

| 2 | Essential Tools - Files, Redirects, and Permissions |

| 3 | Storage - Logical Volume Management and RAID |

| 4 | Operating Running Systems |

| 5 | Security - Manage users and groups |

| 6 | Security - Firewalld/UFW |

| 7 | Security - Patching the system/ Package Management - yum, dnf, rpm |

| 8 | Scripting - System checks |

| 9 | Docker - K3s Setup and basics |

| 10 | K3s advanced w/ microservices |

| 11 | Monitoring systems |

| 12 | Engineering - System baselining/benchmarking and testing |

| 13 | System Hardening |

| 14 | Ansible Automation |

| 15 | Engineering Troubleshooting |

| 16 | Incident Response - Actual incident callout and information gathering |

Suggested Learning Approach

In this course, you will be studying individually and within a group of your peers, primarily in a lab environment. As you work on the course deliverables, you are encouraged to share ideas with your peers and instructor, work collaboratively on projects and team assignments, raise critical questions, and provide constructive feedback.

Students aiming to complete the Linux Systems Administration course are expected to devise and complete a capstone project, to be turned in at the end of the course.

The instructions, expectations, and deliverables for the project are listed on this page.

Instructions

-

Select a topic to research about a project that you are going to build.

Topics:

- System Stability

- System Performance

- System Security

- System monitoring

- Kubernetes

- Programming/Automation

-

Plan the project

- Find documentation or similar projects and build off of what was done there.

-

Document

- First pass, what does it take to build this?

-

Diagram

- Draw the thing

-

Build

- Get screen shots

- Make a video?

- Basically prove you built it.

-

Finalize documentation

- Redline the documentation

-

Prepare to Present (overleaf.com is a great alternative to Powerpoint)

- Setup a 15-20 slide deck on what you did

- Project purpose

- Diagram

- Build Process

- What did you learn?

- How are you going to apply this?

- Setup a 15-20 slide deck on what you did

-

Do any of you want to present?

- Let me (Scott) know and we’ll get you a slot in the last few weeks.

Deliverables

-

Build Documentation for your project that works in either the ProLUG labs, or in the Killercoda environment.

-

A diagram of what you built. This should be both a physical and a logical representation of the system (if applicable).

-

Examples of the running system, screen shots, or other proof that you built it and show it in a running state.

-

A 15-20 slide presentation of the above material that you would present to a group (presenting to us is voluntary, but definitely possible.)

Each course run through the Professional Linux Users Group (ProLUG) allows you to earn a certification upon completion.

Certificates are awarded to those who complete the course within the timeframe that it is being run through the ProLUG Discord.

- To see when courses are running, join the ProLUG Discord server and check the Events section.

If you aim to earn the certification for completing this course, you must follow the guidelines set forth in this document.

There are four main components to earning the certification.

Worksheet Completion

Each unit has a corresponding worksheet.

On this worksheet are discussion questions, terms/definitions, optional "digging

deeper" sections, and reflection questions.

These worksheets must be filled out and kept until the end of the course.

Upon reaching the end, they are to be submitted to the instructor (Scott Champine).

Worksheet Submission Format

The format in which you submit these worksheets is up to you.

Some students prefer to keep them in a GitHub repository, others prefer to just keep them as files on their machines and submit via email.

Discussion Questions

Each unit's worksheet contains multiple discussion questions.

Each discussion question has its own thread in the ProLUG Discord server, in the

#course-discussion-posts forum channel.

To qualify for certification, you must:

- Post your answer to each discussion question in the correct thread.

- Respond to another student's answer in the same thread.

The goal of this is not to create busywork, but to spark discussions and see things from other points of view.

Lab Completion

Each unit has a lab that is to be completed.

The labs, like the worksheets, should be also completed and saved until the end of the course.

These labs should be submitted to the instructor along with the worksheets in the same format of your choice.

Final Project

Each ProLUG course has students complete a capstone project.

This is a requirement for earning a ProLUG course certification.

The project must meet the standards set forth in the Final Project Outline (or otherwise be approved by the instructor, Scott Champine).

In the Beginning

Founded approximately 15 years ago, the Professional Linux User Group (ProLUG) began as a vision of Het Tanis, known by his community alias 'Scott Champine.' Het identified the need for an informal yet structured space where Linux professionals could share knowledge, collaborate, and grow together. What started as local in-person meetups quickly gained traction, thanks to the increasing demand for open-source collaboration and the widespread adoption of Linux in both enterprises and personal projects.

Why ProLUG Started

ProLUG was born out of the recognition that Linux professionals often face challenges that are best solved through peer collaboration and hands-on experience. The community’s founding principles were rooted in creating an environment where newcomers could learn from experienced professionals, and seasoned users could gain exposure to advanced topics and emerging technologies. Its core mission was simple yet impactful: to provide continuous growth opportunities in Linux system administration, automation, and cloud technologies.

Some of the key motivations behind ProLUG's formation include:

- Peer Support: Helping members solve technical challenges through discussion and advice from experts.

- Knowledge Sharing: Encouraging open sharing of tips, tricks, configurations, and scripts related to Linux and open-source tools.

- Hands-on Learning: Providing access to practical labs, exercises, and real-world scenarios for hands-on training.

- Community Mentorship: Offering a space for members to mentor and be mentored by others in different stages of their careers.

- Certification Prep: Assisting members in preparing for recognized industry certifications.

The Expansion into an Online Community

While initially focused on local in-person meetings, ProLUG embraced online platforms to extend its reach globally. The switch to a virtual model enabled:

- Global Networking: Professionals and enthusiasts from around the world could now connect, learn, and collaborate without geographical limitations.

- 24/7 Discussion: Via platforms like Discord, members could share insights, discuss Linux problems, and exchange ideas anytime, anywhere.

- Greater Diversity: The online expansion diversified the member base, incorporating individuals from various industries and technical backgrounds, creating a rich environment for problem-solving.

Interactive Labs and Training Programs

One of ProLUG’s most successful expansions has been its focus on interactive, hands-on labs. To bridge the gap between theory and practice, Het Tanis launched a series of labs on platforms like Killercoda, covering a variety of topics including:

- Linux Essentials and System Administration

- Ansible Automation

- Kubernetes and Container Orchestration

- Security and Network Hardening

With over 50 interactive labs available and more being continuously developed, members benefit from practical scenarios that simulate real-world challenges. The labs cater to beginners, intermediates, and experts, ensuring everyone has something to gain.

Certification and Career Development

In 2024, ProLUG launched its first structured certification course: Enterprise Linux Administration. This program was designed to provide a comprehensive curriculum covering topics such as:

- Advanced Linux system configuration

- Enterprise networking and services

- Security management

- Scripting and automation

The first cohort of graduates successfully completed the program in January 2025, marking a major milestone in ProLUG’s commitment to professional development. Many graduates have reported success stories, such as landing new jobs, securing promotions, or gaining confidence in their Linux expertise.

What is a User Group?

A user group is a community of individuals who come together to share common interests, typically in a specific area of technology, such as Linux. These groups can be local or online and serve as platforms for:

- Collaboration: Members work together to troubleshoot, build projects, and share experiences.

- Networking: Opportunities to connect with professionals, mentors, and employers within the field.

- Learning: Workshops, presentations, and discussions that cover new and emerging technologies.

- Career Growth: Access to resources, training programs, and job opportunities.

ProLUG is a prime example of how a user group can grow beyond its initial purpose, evolving into a vibrant global community with practical learning opportunities and real-world outcomes.

Success Stories

Being part of ProLUG has proven highly beneficial for many members, with success stories ranging from career advancements to personal growth:

- Job Opportunities: Members have found jobs in system administration, DevOps, and cloud engineering roles through networking within ProLUG.

- Certifications: Many members have successfully obtained Linux-related certifications, including RHCSA, RHCE, and LFCS, using ProLUG’s resources and mentorship programs.

- Skill Development: Through interactive labs and group discussions, members have honed skills in automation (Ansible), scripting (Bash, Python), containerization (Docker, Kubernetes), and more.

- Mentorship Relationships: Senior professionals have mentored newcomers, creating a cycle of continuous learning and knowledge sharing.

Current Milestones

- 3,000+ Members: ProLUG’s global community continues to grow rapidly, attracting Linux enthusiasts and professionals from various backgrounds.

- 50+ Interactive Labs: Covering diverse topics, from basic Linux administration to advanced enterprise systems management.

- Ongoing Training Programs: Continuous updates to certification preparation courses, interactive workshops, and guided lab exercises.

ProLUG’s commitment to fostering a collaborative environment has made it a go-to community for anyone interested in Linux. Whether you're a beginner looking to learn the basics or an experienced professional aiming to advance your career, ProLUG offers a pathway to success.

Overview

This unit introduces the foundational skills needed for effective Linux system administration with an emphasis on Red Hat Enterprise Linux (RHEL). It covers:

-

Command-Line Proficiency: Mastery of the shell environment is essential for routine tasks such as navigating the file system, managing processes, and automating scripts.

-

Text Editing with VI/Vim: Given that many RHEL systems use VI/Vim as the default editor for configuration and scripting, learners are introduced to these tools through practical exercises like using vimtutor and exploring interactive resources (e.g., VIM Adventures).

-

Understanding the Linux File System: The worksheet emphasizes the standard Linux file hierarchy—critical for managing files, permissions, and services in a Red Hat environment.

-

Basic Utilities and System Management: Along with the command-line and text editors, the unit touches on fundamental utilities that are pivotal for system configuration, troubleshooting, and maintenance on enterprise systems.

Learning Objectives

- Master Command-Line Fundamentals:

- Develop proficiency in navigating the Linux command-line interface (CLI) for everyday system management tasks.

- Learn how to execute commands to manipulate files, directories, and system processes efficiently.

- Understand the Linux File System:

- Grasp the structure and organization of the Linux file hierarchy.

- Comprehend how the file system affects system configuration, security, and troubleshooting on Red Hat platforms.

- Gain Proficiency in Text Editing with VI/Vim:

- Acquire hands-on experience with vi/vim through guided exercises (e.g., vimtutor, VIM Adventures).

- Learn to edit configuration files and scripts accurately, which is critical for system administration.

- Engage with Practical System Administration Tasks:

- Explore foundational utilities and commands essential for managing a Linux system.

- Apply theoretical knowledge through real-world examples, discussion posts, and interactive resources to reinforce learning.

These objectives are designed to ensure that learners not only acquire technical competencies but also understand how these skills integrate into broader system administration practices in a Red Hat environment.

Relevance & Context

The skills taught in this unit are indispensable for several reasons:

-

Efficient System Management:

The RHEL environment is typically managed via the command line. Proficiency in the CLI, along with an in-depth understanding of the file system, is crucial for daily tasks like system configuration, package management (using tools such as YUM or DNF), and remote troubleshooting. -

Security and Stability:

Editing configuration files, managing system services, and monitoring logs are all critical tasks that ensure the secure and stable operation of RHEL systems. A robust understanding of these basics is necessary to mitigate risks and ensure compliance with enterprise security standards. -

Professional Certification & Career Growth:

For those pursuing certifications like the Red Hat Certified System Administrator (RHCSA) or Red Hat Certified Engineer (RHCE), these foundational skills are not only testable requirements but also a stepping stone for more advanced topics such as automation (using Ansible), container management (with Podman or OpenShift), and performance tuning. -

Operational Excellence:

In enterprise settings where uptime and rapid incident response are paramount, having a solid grasp of these fundamentals enables administrators to quickly diagnose issues, apply fixes, and optimize system performance—thereby directly impacting business continuity and service quality.

Prerequisites

The unit assumes a basic level of computer literacy, meaning the learner is comfortable with fundamental computer operations. However, before achieving that level, one must have digital literacy. This involves:

-

Familiarity with Computer Hardware:

Understanding what a computer is, how to power it on/off, and how to use basic peripherals (keyboard, mouse, monitor). This foundational comfort enables users to interact with a computer effectively. -

Basic Software Navigation:

Knowing how to use common applications like web browsers, file managers, or simple text editors. This prior exposure helps learners transition into more specialized areas (like command-line interfaces) without being overwhelmed. -

Understanding Core Concepts:

Grasping the basic idea of files, directories, and simple interactions with the operating system lays the groundwork for later learning. Without this, even basic computer literacy may be hard to achieve.

Key terms and Definitions

Linux Kernel

Command-Line Interface (CLI)

Shell

Terminal

Filesystem Hierarchy

Package Manager (e.g., YUM/DNF)

Text Editors (VI/Vim)

Sudo

File Permissions and Ownership

Processes and Daemons

System Logs

Networking Basics

Bash Scripting

Instructions

Fill out the worksheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

Downloads

The worksheet has been provided below. The document(s) can be transposed to

the desired format so long as the content is preserved. For example, the .txt

could be transposed to a .md file.

Unit 1 Recording

Discussion Post #1

Using a 0-10 system, rate yourself on how well you think you know each topic in the table below. (You do not have to post this rating).

| Skill | High (8-10) | Mid (4-7) | Low (0-3) | Total |

|---|---|---|---|---|

| Linux | ||||

| Storage | ||||

| Security | ||||

| Networking | ||||

| Git | ||||

| Automation | ||||

| Monitoring | ||||

| Database | ||||

| Cloud | ||||

| Kubernetes | ||||

| Total |

Next, answer these questions here:

-

What do you hope to learn in this course?

-

What type of career path are you shooting for?

Discussion Post #2

-

Post a job that you are interested in from a local job website. (link or image)

-

What do you know how to do in the posting?

-

What don't you know how to do in the posting?

-

What are you doing to close the gap? What can you do to remedy the difference?

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Start thinking about your project ideas (more to come in future weeks):

Topics:

- System Stability

- System Performance

- System Security

- System monitoring

- Kubernetes

- Programming/Automation

You will research, design, deploy, and document a system that improves your administration of Linux systems in some way.

Definitions

Kernel:

Kernel Args:

OS Version:

Modules:

Mount Points:

Text Editor:

Digging Deeper

-

Use vimtutor and see how far you get. What did you learn that you did not know about vi/vim?

-

Go to https://vim-adventures.com/ and see how far you get. What did you learn that you did not already know about vi/vim?

-

Go to https://www.youtube.com/watch?v=d8XtNXutVto and see how far you get with vim. What did you learn that you did not already know about vi/vim?

Reflection Questions

-

What questions do you still have about this week?

-

How are you going to use what you’ve learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment we ask you

rebootthe machine from the command line so that other students will have the intended environment.

Resources / Important Links

Required Materials

- Rocky 9.4+ - ProLUG Lab

- Or comparable Linux box

- root or sudo command access

Downloads

The lab has been provided for convenience below:

Pre-Lab Warm-Up

EXERCISES (Warmup to quickly run through your system and familiarize yourself)

mkdir lab_essentials

cd lab_essentials

ls

touch testfile1

ls

touch testfile{2..10}

ls

# What does this do differently?

# Can you figure out what the size of those files are in bytes? What command did you use?

touch file.`hostname`

touch file.`hostname`.`date +%F`

touch file.`hostname`.`date +%F`.`date +%s`

ls

# What do each of these values mean? `man date` to figure those values out.

# Try to set the following values in the file

# year, just two digits

# today's day of the month

# Just the century

date +%y

date +%e

date +%C

Lab 🧪

This lab is designed to help you get familiar with the basics of the systems you will be working on. Some of you will find that you know the basic material but the techniques here allow you to put it together in a more complex fashion.

It is recommended that you type these commands and do not copy and paste them. Word sometimes likes to format characters and they don’t always play nice with Linux.

Working with files:

# Creating empty files with touch

touch fruits.txt

ls -l fruits.txt

# You will see that fruits.txt exists and is a 0 length (bytes) file

-rw-r--r--. 1 root root 0 Jun 22 07:59 fruits.txt

# Take a look at those values and see if you can figure out what they mean.

# man touch and see if it has any other useful features you might use. If

# you’ve ever used tiered storage think about access times and how to keep data

# hot/warm/cold. If you haven’t just look around for a bit.

rm -rf fruits.txt

ls -l fruits.txt

# You will see that fruits.txt is gone.

Creating files just by stuffing data in them:

echo “grapes 5” > fruits.txt

cat fruits.txt

echo “apples 3” > fruits.txt

cat fruits.txt

echo “ “ > fruits.txt

echo “grapes 5” >> fruits.txt

cat fruits.txt

echo “apples 3” >> fruits.txt

cat fruits.txt

What is the difference between these two? Appending a file >> adds to the file whereas > just overwrites the file each write. Log files almost always are written with >>, we never > over those types of files.

Creating file with vi or vim:

# It is highly recommended the user read vimtutor. To get vimtutor follow

# these steps:

sudo -i

yum -y install vim

vimtutor

# There are about 36 short labs to show a user how to get around inside of vi.

# There are also cheat sheets around to help.

vi somefile.txt

# type “i” to enter insert mode

# Enter the following lines

grapes 5

apples 7

oranges 3

bananas 2

pears 6

pineapples 9

# hit the “esc” key at the top left of your keyboard

# Type “:wq”

# Hit enter

cat somefile.txt

Copying and moving files:

cp somefile.txt backupfile.txt

ls

cat backupfile.txt

mv somefile.txt fruits.txt

ls

cat fruits.txt

Look at what happened in each of these scenarios. Can you explain the difference between cp and mv? Read the manuals for cp and mv to see if there’s anything that may be useful to you. For most of us -r is tremendously useful option for moving directories.

Searching/filtering through files:

# So maybe we only want to see certain values from a file, we can filter

# with a tool called grep

cat fruits.txt

cat fruits.txt | grep apple

cat fruits.txt | grep APPLE

# read the manual for grep and see if you can cause it to ignore case.

# See if you can figure out how to both ignore case and only find the

# word apple at the beginning of the line.

# If you can’t, here’s the answer. Try it:

cat fruits.txt | grep -i "^apple"

Can you figure out why that worked? What do you think the ^ does? Anchoring is a common term for this. See if you can find what anchors to the end of a string.

Sorting files with sort:

# Let’s sort our file fruits.txt and look at what happens to the output

# and the original file

sort fruits.txt

cat fruits.txt

# Did the sort output come out different than the cat output? Did sorting

# your file do anything to your original data? So let’s sort our data again

# and figure out what this command does differently

sort -k 2 fruits.txt

# You can of course man sort to figure it out, but -k refers to the “key” and

# can be useful for sorting by a specific column

# But, if we cat fruits.txt we see we didn’t save anything we did. What if we

# wanted to save these outputs into a file. Could you do it? If you couldn’t,

# here’s an answer:

sort fruits.txt > sort_by_alphabetical.txt

sort -k 2 fruits.txt > sort_by_price.txt

# Cat both of those files out and verify their output

Advanced sort practice:

# Consider the command

ps -aux

# But that’s too long to probably see everything, so let’s use a command

# to filter just the top few lines

ps -aux | head

# So now you can see the actual fields (keys) across the top that we could sort by

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

# So let’s say we wanted to sort by %MEM

ps -aux | sort -k 4 -n -r | head -10

Read man to see why that works. Why do you suppose that it needs to be reversed to have the highest numbers at the top? What is the difference, if you can see any, between using the -n or not using it? You may have to use head -40 to figure that out, depending on your processes running.

Read man ps to figure out what other things you can see or sort by from the ps command. We will examine that command in detail in another lab.

Working with redirection:

The good thing is that you’ve already been redirecting information into files. The > and >> are useful for moving data into files. We have other functionality within redirects that can prove useful for putting data where we want it, or even not seeing the data.

Catching the input of one command and feeding that into the input of another command We’ve actually been doing this the entire time. “|” is the pipe operator and causes the output of one command to become the input of the second command.

cat fruits.txt | grep apple

# This cats out the file, all of it, but then only shows the things that

# pass through the filter of grep. We could continually add to these and make

# them longer and longer

cat fruits.txt | grep apple | sort | nl | awk ‘{print $2}’ | sort -r

pineapples

apples

cat fruits.txt | grep apple | sort | nl | awk '{print $3}' | sort -r

9

7

cat fruits.txt | grep apple | sort | nl | awk '{print $1}' | sort -r

2

1

# Take these apart by pulling the end pipe and command off to see what is

# actually happening:

cat fruits.txt | grep apple | sort | nl | awk '{print $1}' | sort -r

2

1

cat fruits.txt | grep apple | sort | nl | awk '{print $1}'

1

2

cat fruits.txt | grep apple | sort | nl

1 apples 7

2 pineapples 9

cat fruits.txt | grep apple | sort

apples 7

pineapples 9

cat fruits.txt | grep apple

apples 7

pineapples 9

See if you can figure out what each of those commands do.

Read the manual man command for any command you don’t recognize.

Use something you learned to affect the output.

Throwing the output into a file:

We’ve already used > and >> to throw data into a file but when we redirect like that we are catching it before it comes to the screen. There is another tool that is useful for catching data and also showing it to us, that is tee.

date

# comes to the screen

date > datefile

# redirects and creates a file datefile with the value

date | tee -a datefile

# will come to screen, redirect to the file.

Do a quick man on tee to see what the -a does. Try it without that value. Can you see any other useful options in there for tee?

Ignoring pesky errors or tossing out unwanted output:

Sometimes we don’t care when something errs out. We just want to see that it’s working or not. If you’re wanting to filter out errors (2) in the standarderr, you can do this

ls fruits.txt

# You should see normal output

ls fruity.txt

# You should see an error unless you made this file

ls fruity.txt 2> /dev/null

# You should no longer see the error.

# But, sometimes you do care how well your script runs against 100 servers,

# or you’re testing and want to see those errors. You can redirect that to a file, just as easy

ls fruity.txt 2> error.log

cat error.log

# You’ll see the error. If you want it see it a few times do the error line to see it happen.

In one of our later labs we’re going to look at stressing our systems out. For this, we’ll use a command that basically just causes the system to burn cpu cycles creating random numbers, zipping up the output and then throwing it all away. Here’s a preview of that command so you can play with it.

May have to yum -y install bzip2 for this next one to work.

time dd if=/dev/urandom bs=1024k count=20 | bzip2 -9 >> /dev/null

Use “crtl + c” to break if you use that and it becomes too long or your system is under too much load. The only numbers you can play with there are the 1024k and the count. Other numbers should be only changed if you use man to read about them first.

This is the “poor man’s” answer file. Something we used to do when we needed to answer some values into a script or installer. This is still very accurate and still works, but might be a bit advanced with a lot of advanced topics in here. Try it if you’d like but don’t worry if you don’t get this on the first lab.

vi testscript.sh

hit “i” to enter insert mode

add the following lines:

#!/bin/bash

read value

echo "The first value is $value"

read value

echo "The second value is $value"

read value

echo "The third value is $value"

read value

echo "The fourth value is $value"

# hit “esc” key

type in :wq

# hit enter

chmod 755 testscript.sh

# Now type in this (don’t type in the > those will just be there in your shell):

[xgqa6cha@N01APL4244 ~]$ echo "yes

> no

> 10

> why" | ./testscript.sh

> yes

> no

> 10

> why

What happened here is that we read the input from command line and gave it, in order to the script to read and then output. This is something we do if we know an installer wants certain values throughout it, but we don’t want to sit there and type them in, or we’re doing it across 100 servers quickly, or all kinds of reasons. It’s just a quick and dirty input “hack” that counts as a redirect.

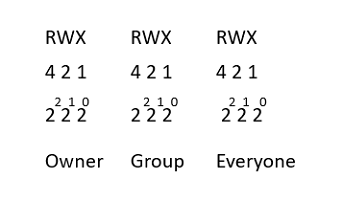

Working with permissions:

Permissions have to do with who can or cannot access (read), edit (write), or execute (xecute)files.

Permissions look like this.

ls -l

| Permission | # of Links | UID Owner | Group Owner | Size (b) | Creation Month | Creation Day | Creation Time | File Name |

|---|---|---|---|---|---|---|---|---|

| -rw-r--r--. | 1 | Root | root | 58 | Jun | 22 | 08:52 | datefile |

The primary permissions commands we’re going to use are going to be chmod (access) and chown (ownership).

A quick rundown of how permissions break out:

Let’s examine some permissions and see if we can’t figure out what permissions are allowed.

ls -ld /root/

# drwx------. 5 root root 4096 Jun 22 09:11 /root/

The first character lets you know if the file is a directory, file, or link. In this case we are looking at my home directory.

rwx: For UID (me).

- What permissions do I have?

---: For group.

- Who are they?

- What can my group do?

---: For everyone else.

- What can everyone else do?

Go find some other interesting files or directories and see what you see there. Can you identify their characteristics and permissions?

Be sure to

rebootthe lab machine from the command line when you are done.

NOTE: This is an optional bonus section. You do not need to read it, but if you're interested in digging deeper, this is for you.

Module 1: Getting Started (Days 1-2)

Day 1: First Contact with VIM

Segment 1: The Basics

- Complete first section of

vimtutor - Learn essential commands:

vim filename- Open/create filei- Enter insert mode (before the cursor)a- Enter insert mode (after the cursor)Esc- Return to normal mode:w- Save changes:q- Quit:wqorZZ- Save and quit:q!- Quit without saving

Segment 2: Building Muscle Memory

- Create five different files

- Practice mode switching 50 times

- Write and save content in each file

- Practice recovering from common mistakes:

- Accidentally pressed keys in normal mode

- Forgot to enter insert mode

- Trying to quit without saving

Segment 3: First Real Task

- Create a simple bash script template

- Add standard sections:

- Shebang line

- Comments

- Basic variables

- Simple functions

- Save and reopen multiple times

Day 2: Comfort Zone

Segment 1: More Basic Operations

- Complete second section of

vimtutor - Practice quick save and exit combinations

- Learn to read VIM messages and errors

- Understand modes in depth:

- Normal mode

- Insert mode

- Visual mode (introduction)

Segment 2: Error Recovery

- Create deliberate errors and fix them:

- Write without insert mode

- Exit without saving needed changes

- Get stuck in different modes

- Practice until you can recover without thinking

Segment 3: Real Config Practice

- Copy

/etc/hostsfile - Make various modifications:

- Add new host entries

- Modify existing entries

- Add comments

- Save different versions

Module 2: Navigation (Days 3-4)

Day 3: Basic Movement

Segment 1: Core Movement Commands

- Master the basics:

h- Leftj- Downk- Upl- Rightw- Next wordb- Previous word0- Line start$- Line end^- First non-blank character of the lineg_- Last non-blank character of the line

Segment 2: Movement Drills

- Create a "movement course" file

- Practice moving between marked points

- Time your navigation speed

- Compete against your previous times

Segment 3: Applied Navigation

- Navigate through

/etc/ssh/sshd_config:- Find specific settings

- Move between sections

- Locate comments

- Jump to line numbers

Day 4: Advanced Movement

Segment 1: Extended Movement

- Learn efficient jumps:

gg- File startG- File end{- Previous paragraph}- Next paragraphCtrl+f- Page downCtrl+b- Page up

Segment 2: Speed Training

- Work with a large configuration file

- Practice jumping between sections

- Find specific lines quickly

- Navigate through code blocks

Segment 3: Real-world Navigation

- Work with system logs

- Jump between error messages

- Navigate through long configuration files

- Practice quick file browsing

Module 3: Essential Editing (Days 5-7)

Day 5: Basic Editing

Segment 1: Edit Commands

- Master core editing:

x- Delete characterdd- Delete lineyy- Copy linep- Paste afterP- Paste beforeu- UndoCtrl + r- Redos- Substitute a characterr- Replace a characterc- Change character

Segment 2: Editing Drills

- Create practice documents

- Delete and replace text

- Copy and paste sections

- Practice undo/redo chains

Segment 3: System File Editing

- Work with

/etc/fstabcopy:- Add mount points

- Remove entries

- Comment lines

- Fix formatting

Day 6: Intermediate Editing

Segment 1: Combined Commands

- Learn efficient combinations:

dw- Delete wordd$- Delete to line endd0- Delete to line startcc- Change whole linecw- Change wordD- Delete to line endC- Change to line end

Segment 2: Practical Application

- Edit service configuration files

- Modify system settings

- Update network configurations

- Clean up log files

Segment 3: Speed Challenges

- Timed editing tasks

- Configuration file cleanup

- Quick text transformation

- Error correction sprints

Day 7: Editing Mastery

Segment 1: Advanced Operations

- Master text objects:

ciw- Change inner wordci"- Change inside quotesdi(- Delete inside parenthesesyi{- Yank inside bracesca"- Change a quotes blockda{- Delete a{}blockya(- Yank a()block

Segment 2: Integration Practice

- Combine all learned commands

- Work with multiple files

- Practice common scenarios

- Time your operations

Daily Success Metrics

By end of each day, you should be able to:

- Day 1: Open, edit, save, and exit files confidently

- Day 2: Understand and recover from common errors

- Day 3: Navigate small files without arrow keys

- Day 4: Move through large files efficiently

- Day 5: Perform basic edits without hesitation

- Day 6: Combine movement and editing commands

- Day 7: Edit configuration files with confidence

Practice Tips

- Use

vimtutorduring breaks - Disable arrow keys completely

- Keep a command log of new discoveries

- Time your editing operations

- Practice with real system files (copies)

Remember: Focus on accuracy first, then build speed.

Downloads

Overview

This unit centers on a focus on security and troubleshooting.

- The use of SELinux for implementing mandatory access controls, managing file permissions with ACLs (Access Control Lists),

- Understanding operational methodologies for incident triage.

Learning Objectives

-

Understand and Configure SELinux:

- Grasp the core concepts of SELinux, including security contexts, labels, and its role in enforcing mandatory access control.

- Learn how to configure and troubleshoot SELinux settings to ensure system security and compliance.

-

Master Access Control Lists (ACLs):

- Recognize the limitations of traditional Unix permissions and how ACLs provide granular control over file and directory access.

- Develop skills in applying and managing ACLs in a complex Linux environment.

-

Develop Effective Troubleshooting Methodologies:

- Acquire techniques to diagnose and resolve system access issues, particularly those arising from SELinux policies and ACL misconfigurations.

- Apply structured troubleshooting strategies to ensure minimal downtime and maintain high availability.

-

Integrate Theoretical Knowledge with Practical Application:

- Engage with interactive exercises, discussion prompts, and real-world scenarios to reinforce learning.

- Utilize external resources, such as technical documentation and instructional videos, to supplement hands-on practice.

-

Enhance Collaborative Problem-Solving Skills:

- Participate in peer discussions and reflective exercises to compare different approaches to system administration challenges.

- Learn to articulate and document troubleshooting processes and system configurations for continuous improvement.

-

Build a Foundation for Advanced Security Practices:

- Understand how SELinux and ACLs fit into the broader context of system security and operational stability.

- Prepare for more advanced topics by reinforcing the fundamental skills needed to manage and secure Red Hat Enterprise Linux environments.

These objectives aim to ensure that learners not only acquire specific technical skills but also develop a holistic understanding of how to secure and manage Linux systems in enterprise settings.

Relevance & Context

For Linux administrators and engineers, mastering SELinux and ACLs is essential because these tools add critical layers of security and control over system resources. By understanding how to use security contexts and labels, professionals can:

-

Enhance System Security: Implementing SELinux helps mitigate vulnerabilities by enforcing strict access controls.

-

Troubleshoot Access Issues: Knowledge of ACLs and SELinux enables the identification and resolution of permission-related issues, which are common in complex, multi-user environments.

-

Improve System Reliability: Understanding these concepts supports the broader goal of maintaining high availability and operational stability, especially when systems must operate under varying security configurations.

Prerequisites

Before engaging with this unit, readers should have a foundational understanding of:

-

Basic Linux Commands and File System Structure: Familiarity with navigating Linux directories, managing files, and using the terminal.

-

Traditional Unix Permissions: A solid grasp of the standard user/group/other permission model.

-

Fundamental Security Principles: An introductory knowledge of concepts like Discretionary Access Control (DAC) and Mandatory Access Control (MAC), which provide the groundwork for understanding SELinux.

-

Basic Troubleshooting Techniques: Experience with diagnosing and resolving common system issues will be beneficial when applying the methodologies discussed in the unit.

Key terms and Definitions

SELinux (Security-Enhanced Linux)

Access Control Lists (ACLs)

Security Contexts

Mandatory Access Control (MAC)

Discretionary Access Control (DAC)

Uptime

Standard Streams (stdin, stdout, stderr)

High Availability (HA)

Service Level Objectives (SLOs)

Troubleshooting Methodologies

Instructions

Fill out the worksheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

Downloads

The worksheet has been provided below. The document(s) can be transposed to

the desired format so long as the content is preserved. For example, the .txt

could be transposed to a .md file.

Unit 2 Recording

Unit 2 Discussion Post #1

Think about how week 1 went for you.

-

Do you understand everything that needs to be done?

-

Do you need to allocate more time to the course, and if so, how do you plan to do it?

-

How well did you take notes during the lecture? Do you need to improve this?

Unit 2 Discussion Post #2

Read a blog, check a search engine, or ask an AI about SELinux.

What is the significance of contexts? What are the significance of labels?

Scenario:

You follow your company instructions to add a new user to a set of 10 Linux servers. They cannot access just one of the servers.

When you review the differences in the servers you see that the server they cannot access is running SELINUX. On checking other users have no problem getting into the system.

You find nothing in the documentation (typical) about this different system or how these users are accessing it.

What do you do?

Where do you check?

You may use any online resources to help you answer this. This is not a trick and it is not a “one answer solution”. This is for you to think through.

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Start thinking about your project ideas (more to come in future weeks):

Topics:

- System Stability

- System Performance

- System Security

- System monitoring

- Kubernetes

- Programming/Automation

You will research, design, deploy, and document a system that improves your administration of Linux systems in some way.

Definitions

Uptime:

Standard input (stdin):

Standard output (stdout):

Standard error (stderr):

Mandatory Access Control (MAC):

Discretionary Access Control (DAC):

Security contexts (SELinux):

SELinux operating modes:

Digging Deeper

-

How does troubleshooting differ between system administration and system engineering? To clarify, how might you troubleshoot differently if you know a system was previously running correctly. If you’re building a new system out?

-

Investigate a troubleshooting methodology, by either Google or AI search. Does the methodology fit for you in an IT sense, why or why not?

Reflection Questions

-

What questions do you still have about this week?

-

How are you going to use what you’ve learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment we ask you

rebootthe machine from the command line so that other students will have the intended environment.

Resources / Important Links

Required Materials

- Putty or other connection tool

- Rocky 9.4+ - ProLUG Lab

- Or comparable Linux box

- root or sudo command access

Downloads

The lab has been provided for convenience below:

Pre-Lab Warm-Up

EXERCISES (Warmup to quickly run through your system and familiarize yourself)

cd ~

ls

mkdir evaluation

mkdir evaluation/test/round6

# This fails, can you find out why?

mkdir -p evaluation/test/round6

# This works, think about why?

cd evaluation

pwd

# What is the path you are in?

touch testfile1

ls

# What did this do?

touch testfile{2..10}

ls

# What did this do differently than earlier?

# touch .hfile .hfile2 .hfile3

ls

# Can you see your newest files? Why or why not? (man ls)

# What was the command to let you see those hidden files?

ls -l

# What do you know about this long listing? Think about 10 things this can show you.

# Did it show you all the files or are some missing?

Lab 🧪

This lab is designed to help you get familiar with the basics of the systems you will be working on. Some of you will find that you know the basic material but the techniques here allow you to put it together in a more complex fashion.

It is recommended that you type these commands and do not copy and paste them. Word sometimes likes to format characters and they don’t always play nice with Linux.

Gathering system information:

hostname

cat /etc/*release

# What do you recognize about this output? What version of RHEL (CENTOS) are we on?

uname

uname -a

uname -r

# man uname to see what those options mean if you don’t recognize the values

Check the amount of RAM:

cat /proc/meminfo

free

free -m

# What do each of these commands show you? How are they useful?

Check the number of processors and processor info:

cat /proc/cpuinfo

# What type of processors do you have? How many are there? (counting starts at 0)

cat /proc/cpuinfo | grep proc | wc -l

# Does this command accurately count the processors?

Check Storage usage and mounted filesystems:

df

# But df is barely readable, so find the option that makes it more readable `man df`

df -h

df -h | grep -i var

# What does this show, or search for? Can you invert this search? (hint `man grep`

# look for invert or google “inverting grep’s output”)

df -h | grep -i sd

# This one is a little harder, what does this one show? Not just the line, what are

# we checking for? (hint if you need it, google “what is /dev/sda in linux”)

mount

# Mount by itself gives a huge amount of information. But, let’s say someone is asking

# you to verify that the mount is there for /home on a system. Can you check that

# quickly with one command?

mount | grep -i home

#This works, but there is a slight note to add here. Just because something isn’t

# individually mounted doesn’t mean it doesn’t exist. It just means it’s not part of

# it’s own mounted filesystem.

mount | grep -i /home/xgqa6cha

# will produce no output

df -h /home/xgqa6cha

# will show you that my home filesystem falls under /home.

cd ~; pwd; df -h .

# This command moves you to your home directory, prints out that directory,

# and then shows you what partition your home directory is on.

du -sh .

# will show you space usage of just your directory

try `du -h .` as well to see how that ouput differs

# read `man du` to learn more about your options.

Check the system uptime:

uptime

man uptime

# Read the man for uptime and figure out what those 3 numbers represent.

# Referencing this server, do you think it is under high load? Why or why not?

Check who has recently logged into the server and who is currently in:

last

# Last is a command that outputs backwards. (Top of the output is most recent).

# So it is less than useful without using the more command.

last | more

# Were you the last person to log in? Who else has logged in today?

w

who

whoami

# how many other users are on this system? What does the pts/0 mean on google?

Check who you are and what is going on in your environment:

printenv

# This scrolls by way too fast, how would you search for your home?

printenv | grep -i home

whoami

id

echo $SHELL

Check running processes and services:

ps -aux | more

ps -ef | more

ps -ef | wc -l

Check memory usage and what is using the memory:

# Run each of these individually for understanding before we look at part b.

free -m

free -m | egrep “Mem|Swap”

free -m| egrep “Mem|Swap” | awk ‘{print $1, $2, $3}’

free -t | egrep "Mem|Swap" | awk '{print $1 " Used Space = " ($3 / $2) * 100"%"}'

# Taking this apart a bit:

# You’re just using free and searching for the lines that are for memory and swap

# You then print out the values $1 = Mem or Swap

# You then take $3 used divided by $2 total and multiply by 100 to get the percentage

Have you ever written a basic check script or touched on conditional statements or loops? (Use ctrl + c to break out of these):

while true; do free -m; sleep 3; done

# Watch this output for a few and then break with ctrl + c

# Try to edit this to wait for 5 seconds

# Try to add a check for uptime and date each loop with a blank line between

# each and 10 second wait:

while true; do date; uptime; free -m; echo “ “; sleep 10; done

# Since we can wrap anything inside of our while statements, let’s try adding

# something from earlier:

while true; do free -t | egrep "Mem|Swap" | awk '{print $1 " Used Space = " ($3 / $2) * 100"%"}'; sleep 3; done

seq 1 10

# What did this do?

# Can you man seq to modify that to count from 2 to 20 by 2’s?

# Let’s make a counting for loop from that sequence

for i in `seq 1 20`; do echo "I am counting i and am on $i times through the loop"; done

Can you tell me what is the difference or significance of the $ in the command above? What does that denote to the system?

Be sure to

rebootthe lab machine from the command line when you are done.

Overview

The unit focuses on understanding and implementing techniques to ensure systems remain operational with minimal downtime.

-

The process of quickly assessing, prioritizing, and addressing system incidents.

-

Leveraging performance indicators (KPIs, SLIs) and setting clear operational targets (SLOs, SLAs) to guide troubleshooting and recovery efforts.

Learning Objectives

-

Understand Fundamental Concepts of System Reliability and High Availability:

-

Explain the importance of uptime and the implications of “Five 9’s” availability in mission-critical environments.

-

Define key terms such as Single Point of Failure (SPOF), Mean Time to Detect (MTTD), Mean Time to Recover (MTTR), and Mean Time Between Failures (MTBF).

-

-

Identify and Apply High Availability Architectures:

-

Differentiate between Active-Active and Active-Standby configurations and describe their advantages and trade-offs.

-

Evaluate real-world scenarios to determine where redundancy and clustering (using tools like Pacemaker and Corosync) can improve system resilience.

-

-

Develop Incident Triage and Response Skills:

-

Outline a structured approach to incident detection, prioritization, and resolution.

-

Use performance metrics (KPIs, SLIs, SLOs, and SLAs) to guide decision-making during operational incidents.

-

-

Integrate Theoretical Knowledge with Practical Application:

-

Leverage external resources (such as AWS whitepapers, Google SRE documentation, and Red Hat guidelines) to deepen understanding of system reliability best practices.

-

Participate in interactive discussion posts and collaborative problem-solving exercises to reinforce learning.

-

-

Cultivate Analytical and Troubleshooting Abilities:

-

Apply systematic troubleshooting techniques to diagnose and resolve system issues.

-

Reflect on incident case studies and simulated exercises to improve proactive prevention strategies.

-

These learning objectives are designed to ensure that participants not only grasp the theoretical underpinnings of system reliability and high availability but also build the practical skills needed for effective incident management and system optimization in a professional Linux environment.

Relevance & Context

-

Ensuring Mission-Critical Uptime: Minimizing downtime is critical, and high availability strategies help ensure continuous service—even in the face of hardware or software failures.

-

Optimized Incident Management: A well-practiced incident triage process enables administrators to quickly diagnose issues, reduce system downtime, and mitigate potential service interruptions.

-

Designing Resilient Architectures: For a Red Hat Systems Administrator, understanding how to build redundancy (using techniques like Active-Active or Active-Standby clustering) and eliminate Single Points of Failure (SPOFs) is key to creating robust systems.

-

Data-Driven Decision Making: Leveraging metrics such as KPIs, SLIs, SLOs, and SLAs allows administrators to set measurable goals, monitor performance, and make informed decisions about system improvements.

-

Integration with Enterprise Tools:

Red Hat environments often utilize specific tools (such as Pacemaker and Corosync for clustering, and Ansible for configuration management) that align with the concepts taught in this unit. Mastery of these principles helps engineers integrate and optimize these tools effectively within their infrastructure.

Prerequisites

Before engaging with this unit, readers should have a foundational understanding of:

-

Basic Networking Concepts: Familiarity with the principles of networking (such as IP addressing, DNS, and basic network troubleshooting) is crucial because many Linux administration tasks involve network configuration and monitoring.

-

Text Editing and Scripting Basics: An introductory exposure to editing text (using simple editors) and the idea of writing or running small scripts helps prepare learners for more complex shell operations.

-

Version Control (Git): Since the learning material and collaborative discussions use GitHub, understanding Git and markdown is beneficial.

-

Problem-Solving: A general troubleshooting mindset, including the ability to search documentation, diagnose issues systematically, and apply corrective measures.

Key terms and Definitions

Resilience Engineering

Fault Tolerance

Proactive Monitoring

Observability

Incident Response

Root Cause Analysis (RCA)

Disaster Recovery (DR)

Error Budgeting

Capacity Planning

Load Balancing Service Continuity

Infrastructure as Code (IaC)

Configuration Management

Preventive Maintenance

DevOps Culture

Instructions

Fill out the worksheet as you progress through the lab and discussions. Hold your worksheets until the end to turn them in as a final submission packet.

Resources / Important Links

- Google SRE Book - Implementing SLOs

- AWS High Availability Architecture Guide

- Red Hat High Availability Cluster Configuration

Downloads

The worksheet has been provided below. The document can be transposed to

the desired format so long as the content is preserved. For example, the .txt

could be transposed to a .md file.

Unit 3 Recording

Discussion Post #1

Scan the chapter here for keywords and pull out what you think will help you to better understand how to triage an incident.

Read the section called "Operation Security" in this same chapter: Building Secure and Reliable Systems

- What important concepts do you learn about how we behave during an operational response to an incident?

Discussion Post #2

Ask Google, find a blog, or ask an AI about high availability. (Here's one if you need it: AWS Real-Time Communication Whitepaper

- What are some important terms you read about? Why do you think understanding HA will help you better in the context of triaging incidents?

The discussion posts are done in Discord threads. Click the 'Threads' icon on the top right and search for the discussion post.

Definitions

Five 9's:

Single Point of Failure (SPOF):

Key Performance Indicators (KPIs):

Service Level Indicator (SLI):

Service Level Objective (SLO):

Service Level Agreement (SLA):

Active-Standby:

Active-Active:

Mean Time to Detect (MTTD):

Mean Time to Recover/Restore (MTTR):

Mean Time Between Failures (MTBF):

Digging Deeper

-

If uptime is so important to us, why is it so important to us to also understand how our systems can fail? Why would we focus on the thing that does not drive uptime?

-

Start reading about SLOs: Implementing SLOs How does this help you operationally? Does it make sense that keeping systems within defined parameters will help keep them operating longer?

Reflection Questions

-

What questions do you still have about this week?

-

How are you going to use what you've learned in your current role?

If you are unable to finish the lab in the ProLUG lab environment we ask you

rebootthe machine from the command line so that other students will have the intended environment.

Resources / Important Links

Required Materials

- Rocky 9.4+ - ProLUG Lab

- Or comparable Linux box

- root or sudo command access

Downloads

The lab has been provided for convenience below:

Pre-Lab Warm-Up

EXERCISES (Warmup to quickly run through your system and familiarize yourself)

cd ~

mkdir lvm_lab

cd lvm_lab

touch somefile

echo “this is a string of text” > somefile

cat somefile

echo “this is a string of text” > somefile

# Repeat 3 times

cat somefile

# How many lines are there?

Echo “this is a string of text” >> somefile

# Repeat 3 times

cat somefile

# How many lines are there?

# cheat with `cat somefile | wc -l`

echo “this is our other test text” >> somefile

# Repeat 3 times

cat somefile | nl

# How many lines are there?

cat somefile | nl | grep test

# compare that with 14

cat somefile | grep test | nl

If you want to preserve positional lines in file (know how much you’ve cut out when you grep something, or generally be able to find it in the unfiltered file for context, always | nl | before your grep

Pre Lab - Disk Speed tests:

When using the ProLUG lab environment, you should always check that there

are no other users on the system w or who.

After this, you may want to check the current state of the disks, as they retain

their information even after a reboot resets the rest of the machine. lsblk /dev/xvda.

# If you need to wipe the disks, you should use fdisk or a similar partition utility.

fdisk /dev/xvda

p #print to see partitions

d #delete partitions or information

w #Write out the changes to the disk.

This is an aside, before the lab. This is a way to test different read or writes into or out of your filesystems as you create them. Different types of raid and different disk setups will give different speed of read and write. This is a simple way to test them. Use these throughout the lab in each mount for fun and understanding.

Write tests (saving off write data - rename /tmp/file each time):

# Check /dev/xvda for a filesystem

blkid /dev/xvda

# If it does not have one, make one

mkfs.ext4 /dev/xvda

mkdir /space # (If you don’t have it. Lab will tell you to later as well)

mount /dev/xvda /space

Write Test:

for i in `seq 1 10`; do time dd if=/dev/zero of=/space/testfile$i bs=1024k count=1000 | tee -a /tmp/speedtest1.basiclvm; done

Read tests:

for i in `seq 1 10`; do time dd if=/space/testfile$i of=/dev/null; done

Cleanup:

for i in `seq 1 10`; do rm -rf /space/testfile$i; done

If you are re-creating a test without blowing away the filesystem, change the name or counting numbers of testfile because that’s the only way to be sure there is not some type of filesystem caching going on to optimize. This is especially true in SAN write tests.

Lab 🧪

start in root (#); cd /root

LVM explanation and use within the system:

# Check physical volumes on your server (my output may vary)

fdisk -l | grep -i xvd

# Disk /dev/xvda: 15 GiB, 16106127360 bytes, 31457280 sectors

# Disk /dev/xvdb: 3 GiB, 3221225472 bytes, 6291456 sectors

# Disk /dev/xvdc: 3 GiB, 3221225472 bytes, 6291456 sectors

# Disk /dev/xvde: 3 GiB, 3221225472 bytes, 6291456 sectors

Looking at Logical Volume Management:

Logical Volume Management is an abstraction layer that looks a lot like how we carve up SAN disks for storage management. We have Physical Volumes that get grouped up into Volume Groups. We carve Volume Groups up to be presented as Logical Volumes.

Here at the Logical Volume layer we can assign RAID functionality from our Physical Volumes attached to a Volume Group or do all kinds of different things that are “under the hood”. Logical Volumes get filesystems formatting and are mounted to the OS.

There are many important commands for showing your physical volumes, volume groups, and logical volumes.

The three simplest and easiest are:

pvs

vgs

lvs

With these you can see basic information that allows you to see how the disks are allocated. Why do you think there is no output from these commands the first time you run them? Try these next commands to see if you can figure out what is happening? To see more in depth information try pvdisplay, vgdisplay, and lvdisplay.

If there is still no output, it’s because this system is not configured for LVM. You will notice that none of the disk you verified are attached are allocated to LVM yet. We’ll do that next.

Creating and Carving up your LVM resources:

Disks for this lab are /dev/xvdb, /dev/xvdc, and /dev/xvdd. (but verify before continuing and adjust accordingly.)

We can do individual pvcreates for each disk pvcreate /dev/xvdb but we can also

loop over them with a simple loop as below. Use your drive letters.

for disk in b c d; do pvcreate /dev/xvd$disk; done

# Physical volume "/dev/xvdb" successfully created.

# Creating devices file /etc/lvm/devices/system.devices

# Physical volume "/dev/xvdc" successfully created.

# Physical volume "/dev/xvde" successfully created.

# To see what we made:

pvs

# PV VG Fmt Attr PSize PFree

# /dev/xvdb lvm2 --- 3.00g 3.00g

# /dev/xvdc lvm2 --- 3.00g 3.00g

# /dev/xvde lvm2 --- 3.00g 3.00g

vgcreate VolGroupTest /dev/xvdb /dev/xvdc /dev/xvde

# Volume group "VolGroupTest" successfully created

vgs

# VG #PV #LV #SN Attr VSize VFree

# VolGroupTest 3 0 0 wz--n- <8.99g <8.99g

lvcreate -l +100%FREE -n lv_test VolGroupTest

# Logical volume "lv_test" created.

lvs

# LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

# lv_test VolGroupTest -wi-a----- <8.99g

# Formatting and mounting the filesystem

mkfs.ext4 /dev/mapper/VolGroupTest-lv_test

# mke2fs 1.42.9 (28-Dec-2013)

# Filesystem label=

# OS type: Linux

# Block size=4096 (log=2)

# Fragment size=4096 (log=2)

# Stride=0 blocks, Stripe width=0 blocks

# 983040 inodes, 3929088 blocks

# 196454 blocks (5.00%) reserved for the super user

# First data block=0

# Maximum filesystem blocks=2151677952

# 120 block groups

# 32768 blocks per group, 32768 fragments per group

# 8192 inodes per group

# Superblock backups stored on blocks:

# 32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208

#

# Allocating group tables: done

# Writing inode tables: done

# Creating journal (32768 blocks): done

# Writing superblocks and filesystem accounting information: done

mkdir /space #Created earlier

vi /etc/fstab

# Add the following line

# /dev/mapper/VolGroupTest-lv_test /space ext4 defaults 0 0

# reload fstab

systemctl daemon-reload

If this command works, there will be no output. We use the df -h in the next command to verify the new filesystem exists. The use of mount -a and not manually mounting the filesystem from the command line is an old administration trick I picked up over the years.

By setting our mount in /etc/fstab and then telling the system to mount everything we verify that this will come back up properly during a reboot. We have mounted and verified we have a persistent mount in one step.

df -h

# Filesystem Size Used Avail Use% Mounted on

# devtmpfs 4.0M 0 4.0M 0% /dev

# tmpfs 2.0G 0 2.0G 0% /dev/shm

# tmpfs 2.0G 8.5M 1.9G 1% /run

# tmpfs 2.0G 1.4G 557M 72% /

# tmpfs 2.0G 0 2.0G 0% /run/shm

# 192.168.200.25:/home 44G 15G 30G 34% /home

# 192.168.200.25:/opt 44G 15G 30G 34% /opt

# tmpfs 390M 0 390M 0% /run/user/0

# /dev/mapper/VolGroupTest-lv*test 8.8G 24K 8.3G 1% /space

Good place to speed test and save off your data.

Removing and breaking down the LVM to raw disks:

The following command is one way to comment out the line in /etc/fstab. If you had to do this across multiple servers this could be useful. (Or you can just use vi for simplicity).

grep lv_test /etc/fstab; perl -pi -e "s/\/dev\/mapper\/VolGroupTest/#removed \/dev\/mapper\/VolGroupTest/" /etc/fstab; grep removed /etc/fstab

# /dev/mapper/VolGroupTest-lv_test /space ext4 defaults 0 0

#removed dev/mapper/VolGroupTest-lv_test /space ext4 defaults 0 0

umount /space

lvremove /dev/mapper/VolGroupTest-lv_test

# Do you really want to remove active logical volume VolGroupTest/lv_test? [y/n]: y

# Logical volume "lv_test" successfully removed

vgremove VolGroupTest

# Volume group "VolGroupTest" successfully removed

for disk in c e f; do pvremove /dev/sd$disk; done

# Labels on physical volume "/dev/sdc" successfully wiped.

# Labels on physical volume "/dev/sde" successfully wiped.

# Labels on physical volume "/dev/sdf" successfully wiped.

Use your pvs;vgs;lvs commands to verify those volumes no longer exist.

pvs;vgs;lvs

# PV VG Fmt Attr PSize PFree

# /dev/sda2 VolGroup00 lvm2 a-- 17.48g 4.00m

# /dev/sdb VolGroup01 lvm2 a-- 20.00g 96.00m

# VG #PV #LV #SN Attr VSize VFree

# VolGroup00 1 9 0 wz--n- 17.48g 4.00m

# VolGroup01 1 1 0 wz--n- 20.00g 96.00m

# LV VG Attr LSize Pool Origin Data% Meta% Move Log

# LogVol00 VolGroup00 -wi-ao---- 2.50g

# LogVol01 VolGroup00 -wi-ao---- 1000.00m

# LogVol02 VolGroup00 -wi-ao---- 5.00g

# LogVol03 VolGroup00 -wi-ao---- 1.00g

# LogVol04 VolGroup00 -wi-ao---- 5.00g

# LogVol05 VolGroup00 -wi-ao---- 1.00g

# LogVol06 VolGroup00 -wi-ao---- 1.00g

# LogVol07 VolGroup00 -wi-ao---- 512.00m

# LogVol08 VolGroup00 -wi-ao---- 512.00m

# lv_app VolGroup01 -wi-ao---- 19.90g

More complex types of LVM:

LVM can also be used to raid disks

Create a RAID 5 filesystem and mount it to the OS (For brevity’s sake we will be limiting show commands from here on out, please use pvs,vgs,lvs often for your own understanding)

for disk in c e f; do pvcreate /dev/sd$disk; done

# Physical volume "/dev/sdc" successfully created.

# Physical volume "/dev/sde" successfully created.

# Physical volume "/dev/sdf" successfully created.

vgcreate VolGroupTest /dev/sdc /dev/sde /dev/sdf

lvcreate -l +100%FREE --type raid5 -n lv_test VolGroupTest

mkfs.xfs /dev/mapper/VolGroupTest-lv_test

vi /etc/fstab

# fix the /space directory to have these parameters (change ext4 to xfs)

/dev/mapper/VolGroupTest-lv_test /space xfs defaults 0 0

df -h

# Filesystem Size Used Avail Use% Mounted on

# /dev/mapper/VolGroup00-LogVol08 488M 34M 419M 8% /var/log/audit

# /dev/mapper/VolGroupTest-lv_test 10G 33M 10G 1% /space

Since we’re now using RAID 5 we would expect to see the size no longer match the full 15GB, 10GB is much more of a RAID 5 value 66% of raw disk space.

To verify our raid levels we use lvs

lvs

# LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync

# LogVol00 VolGroup00 -wi-ao---- 2.50g

# LogVol01 VolGroup00 -wi-ao---- 1000.00m

# LogVol02 VolGroup00 -wi-ao---- 5.00g

# LogVol03 VolGroup00 -wi-ao---- 1.00g

# LogVol04 VolGroup00 -wi-ao---- 5.00g

# LogVol05 VolGroup00 -wi-ao---- 1.00g

# LogVol06 VolGroup00 -wi-ao---- 1.00g

# LogVol07 VolGroup00 -wi-ao---- 512.00m

# LogVol08 VolGroup00 -wi-ao---- 512.00m

# lv*app VolGroup01 -wi-ao---- 19.90g

# lv_test VolGroupTest rwi-aor--- 9.98g 100.00

Spend 5 min reading the man lvs page to read up on raid levels and what they can accomplish.

To run RAID 5 3 disks are needed. To run RAID 6 at least 4 disks are needed.

Good place to speed test and save off your data

Set the system back to raw disks:

Unmount /space and remove entry from /etc/fstab

lvremove /dev/mapper/VolGroupTest-lv_test

# Do you really want to remove active logical volume VolGroupTest/lv_test? [y/n]: y

# Logical volume "lv_test" successfully removed

vgremove VolGroupTest

# Volume group "VolGroupTest" successfully removed

for disk in c e f; do pvremove /dev/sd$disk; done

# Labels on physical volume "/dev/sdc" successfully wiped.

# Labels on physical volume "/dev/sde" successfully wiped.

# Labels on physical volume "/dev/sdf" successfully wiped.

Working with MDADM as another RAID option:

There could be a reason to use MDADM on the system. For example you want raid handled outside of your LVM so that you can bring in sets of new disks already raided and treat them as their own Physical Volumes. Think, “I want to add another layer of abstraction so that even my LVM is unaware of the RAID levels.” This has special use case, but is still useful to understand.

May have to install mdadm yum: yum install mdadm

Create a raid5 with MDADM:

mdadm --create -l raid5 /dev/md0 -n 3 /dev/sdc /dev/sde /dev/sdf

# mdadm: Defaulting to version 1.2 metadata

# mdadm: array /dev/md0 started.

Add newly created /dev/md0 raid to LVM:

This is same as any other add. The only difference here is that LVM is unaware of the lower level RAID that is happening.

pvcreate /dev/md0

# Physical volume "/dev/md0" successfully created.

vgcreate VolGroupTest /dev/md0

# Volume group "VolGroupTest" successfully created

lvcreate -l +100%Free -n lv_test VolGroupTest

# Logical volume "lv_test" created.

lvs

# LV VG Attr LSize Pool Origin Data% Meta% Move Log

# LogVol00 VolGroup00 -wi-ao---- 2.50g

# LogVol01 VolGroup00 -wi-ao---- 1000.00m

# LogVol02 VolGroup00 -wi-ao---- 5.00g

# LogVol03 VolGroup00 -wi-ao---- 1.00g

# LogVol04 VolGroup00 -wi-ao---- 5.00g

# LogVol05 VolGroup00 -wi-ao---- 1.00g

# LogVol06 VolGroup00 -wi-ao---- 1.00g

# LogVol07 VolGroup00 -wi-ao---- 512.00m

# LogVol08 VolGroup00 -wi-ao---- 512.00m

# lv_app VolGroup01 -wi-ao---- 19.90g

# lv_test VolGroupTest -wi-a----- 9.99g

Note that LVM does not see that it is dealing with a raid system, but the size is still 10g instead of 15g.

Fix your /etc/fstab to read

/dev/mapper/VolGroupTest-lv_test /space xfs defaults 0 0

mkfs.xfs /dev/mapper/VolGroupTest-lv_test

# meta-data=/dev/mapper/VolGroupTest-lv_test isize=512 agcount=16, agsize=163712 blks

# = sectsz=512 attr=2, projid32bit=1

# = crc=1 finobt=0, sparse=0

# data = bsize=4096 blocks=2618368, imaxpct=25

# = sunit=128 swidth=256 blks

# naming =version 2 bsize=4096 ascii-ci=0 ftype=1

# log =internal log bsize=4096 blocks=2560, version=2

# = sectsz=512 sunit=8 blks, lazy-count=1

# realtime =none extsz=4096 blocks=0, rtextents=0

mount -a

Good place to speed test and save off your data

Setting the MDADM to persist through reboots:

(not in our lab environment though)

mdadm --detail --scan >> /etc/mdadm.conf

cat /etc/mdadm.conf

# ARRAY /dev/md0 metadata=1.2 name=ROCKY1:0 UUID=03583924:533e5338:8d363715:09a8b834

Verify with df -h ensure that your /space is mounted.

There is no procedure in this lab for breaking down this MDADM RAID.

You are root/administrator on your machine, and you do not care about the data on this RAID. Can you use the internet/man pages/or other documentation to take this raid down safely and clear those disks?

Can you document your steps so that you or others could come back and do this process again?

Be sure to

rebootthe lab machine from the command line when you are done.

NOTE: This is an optional bonus section. You do not need to read it, but if you're interested in digging deeper, this is for you.